Consumer Perspectives of Privacy and Artificial Intelligence

This resource analyzes how consumer perspectives of AI are shaped by the way emerging technologies affect their privacy.

Published: 14 Feb. 2024

New artificial intelligence tools, including virtual personal and voice assistants, chatbots, and large language models like OpenAI's ChatGPT, Meta's Llama 2, and Google's Bard and Gemini, are reshaping the human-technology interface. Given the ongoing development and deployment of AI-powered technologies, a concomitant concern for lawmakers and regulators around the world has been how to minimize their risks to individuals while maximizing their benefits to society. From the Biden-Harris administration's Executive Order 14110 to the political agreement reached on the EU's AI Act, governments around the world are taking steps to regulate AI technologies.

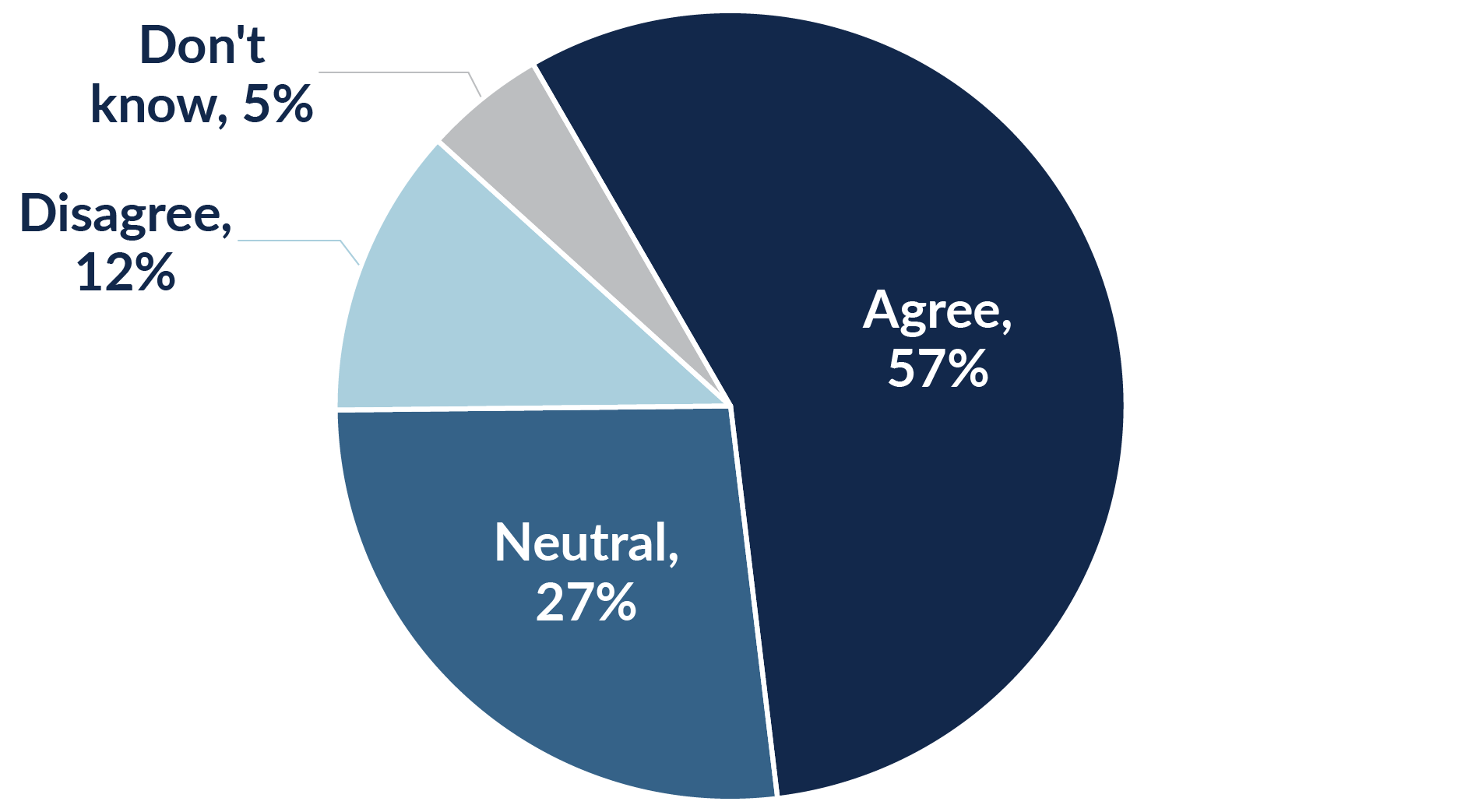

The popularization of generative AI tools, which learn from large quantities of data they scrape from the web, occurs as consumers are increasingly protective of their personal data. As revealed by the IAPP Privacy and Consumer Trust Report 2023, 68% of consumers globally are either somewhat or very concerned about their privacy online. Most find it difficult to understand what types of data about them are being collected and used. The diffusion of AI is one of the newest factors to drive these concerns, with 57% of consumers globally agreeing that AI poses a significant threat to their privacy.

The use of AI poses a significant threat to privacy

Similarly, a 2023 study carried out by KPMG and the University of Queensland found roughly three in four consumers globally feel concerned about the potential risks of AI. While most believe AI will have a positive impact in areas such as helping people find products and services online, helping companies make safer cars and trucks, and helping doctors provide quality care, a majority, at 53%, also believe AI will make it harder for people to keep their personal information private.

Indeed, one of the public's biggest concerns related to AI is that it will have a negative effect on individual privacy. According to a recent Pew Research Center survey, 81% of consumers think the information collected by AI companies will be used in ways people are uncomfortable with, as well as in ways that were not originally intended. Another study in January 2024 by KPMG found 63% of consumers were concerned about the potential for generative AI to compromise an individual's privacy by exposing personal data to breaches or through other forms of unauthorized access or misuse.

Thus, consumer perceptions of AI are being shaped by their feelings about how these emerging technologies will affect their privacy. A wide range of IAPP and third-party studies have investigated the intersection of attitudes and knowledge around both AI and privacy issues across sectors — from financial technology to dating apps to wearable health care technologies. A synthesis of this literature provides insights into why protecting consumer privacy matters for organizations that develop and deploy AI tools. Ultimately, businesses and governments alike have central roles in shaping the foundational attitudes and trust upon which the digital economy is built. Being cognizant of consumer perspectives on privacy and AI, therefore, is of key importance across both the public and the private sector.

Consumers' privacy concerns

Far from the notion that "privacy is dead," research into privacy perceptions has consistently demonstrated "consumers fundamentally care about privacy and often act on that concern." Consumers globally are worried about the ubiquity of data collection and new uses of data by emerging technologies, including AI.

For example, a 2019 Ipsos survey found 80% of respondents across 24 countries expressed concern about their online privacy. As further evidence of these trends over time, Cisco's 2021 study of consumer confidence revealed nearly half of consumers, at 46%, do not feel they are able to effectively protect their personal data. Of these, the majority, at 76%, said this was because it is too hard to figure out what companies are doing with their data. In addition, 36% said it was because they do not trust companies to follow their stated policies. Similarly, a 2021 KPMG study found that four in 10 U.S. consumers do not trust companies to use their data in an ethical way, while 13% do not even trust their own employer.

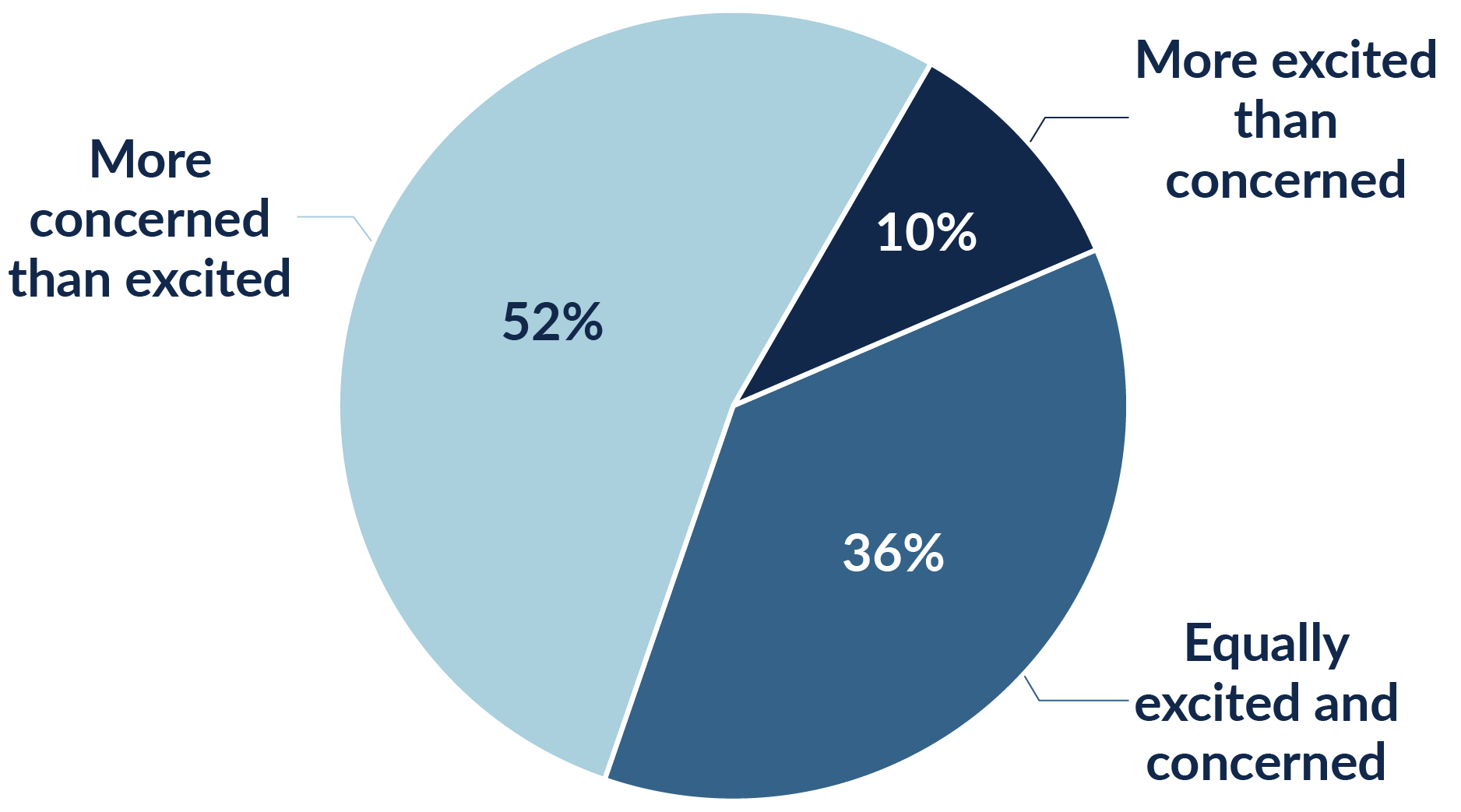

These privacy attitudes are showing signs of "spillage" into other relevant domains, namely AI. This is leading to greater apprehension by consumers as new technologies are introduced. For example, far more U.S. adults are concerned, at 52%, than excited, at 10%, about AI becoming embedded into their daily lives.

The complexity of privacy violations

The preferences and expectations of individuals with respect to how their data is collected and used are "complicated" — to say the least — and they vary based on things such as the purpose of the collection and analysis of data, the manner in which it is collected, and with whom it is shared. Put more simply, the purpose for which data is collected and used has a large effect on whether individuals perceive it as privacy invasive. For example, employees are less concerned when it comes to their employers collecting information that is directly related to their job duties. A KPMG study found only 24% of employees express concern about employers collecting data about their productivity, while only 17% are concerned about employers tracking when they start work. By contrast, more employees find it unacceptable for employers to view their social media accounts, at 44%, monitor their instant messaging, at 32%, or review their browsing history, at 32%.

Studies show a critical subsegment of consumers have become less willing to share their personal information and are engaging in what is known as privacy self-defense. Examples of this from the World Economic Forum include "withholding personal information, giving false biographical details or removing information from mailing lists altogether." It's also important to keep in mind that different individuals and groups may respond differently to the risks of data collection, as "not everybody is equally comfortable with sharing their data." Trust in the financial sector, for instance, varies by racial and age groups, with racial minorities less likely to trust financial institutions to process their data and younger respondents more likely to do so. The nature of an individual's privacy concerns can also vary by gender, with women more concerned with data sharing implications that pertain to their physical safety.

As legal scholars Danielle Keats Citron and Daniel J. Solove explain in their article, privacy violations have a variety of origins. They can stem from a sense that a promise regarding one's data has been broken, i.e., a promise about how it will be collected, used or disclosed. Privacy harms may also be brought about by floods of unwanted advertising and spam. They may also occur when an individual's expectations about which third parties their data will be shared with are thwarted, leading to some kind of data-related detriment.

Similarly, as the World Economic Forum points out in its Global Risks Report, loss of control over one's data can lead to a variety of privacy harms, from anxiety about personal attacks, fraud, cyberbullying and stalking, to a lack of agency as well as apathy over one's ability to secure one's data. In a Norton and Harris Poll survey of individuals who recently detected unauthorized access to their accounts, the most common emotions they experienced were anger, stress and vulnerability. Feeling violated, scared, powerless and embarrassed were also common in the aftermath of such cybercrimes.

Rather than magnitude or severity, what makes privacy harms so damaging to individuals and society overall is the sheer frequency of their occurrence. As Citron and Solove further explain:

"For many privacy harms, the injury may appear small when viewed in isolation, such as the inconvenience of receiving an unwanted email or advertisement or the failure to honor people's expectation that their data will not be shared with third parties. But when done by hundreds or thousands of companies, the harm adds up. Moreover, these small harms are dispersed among millions (and sometimes billions) of people. Over time, as people are each inundated by a swarm of small harms, the overall societal impact is significant."

—Danielle Keats Citron and Daniel J. Solove

Thus, decades of privacy harm from all sides, from cybercriminals to government surveillance programs to data-hungry private actors, have decreased the public's trust in the collection and processing of personal data. Indeed, numerous threats, including errant data collection and use, a lack of satisfactory legal and policy solutions, and the regular emergence of new privacy-invasive technologies, are heightening consumers' privacy concerns. As time passes, these privacy-related attitudes become entrenched behaviors rooted in distrust of data collection, with severe and tangible consequences for the global digital economy. With the incorporation of data-driven AI tools into a growing array of business applications, from customer service to marketing to fintech and telehealth, consumer privacy concerns will continue to rise.

Against this global background of growing privacy concerns, the following sections explore the dynamics of trust among consumers for the use of AI in business.

Consumer trust in AI

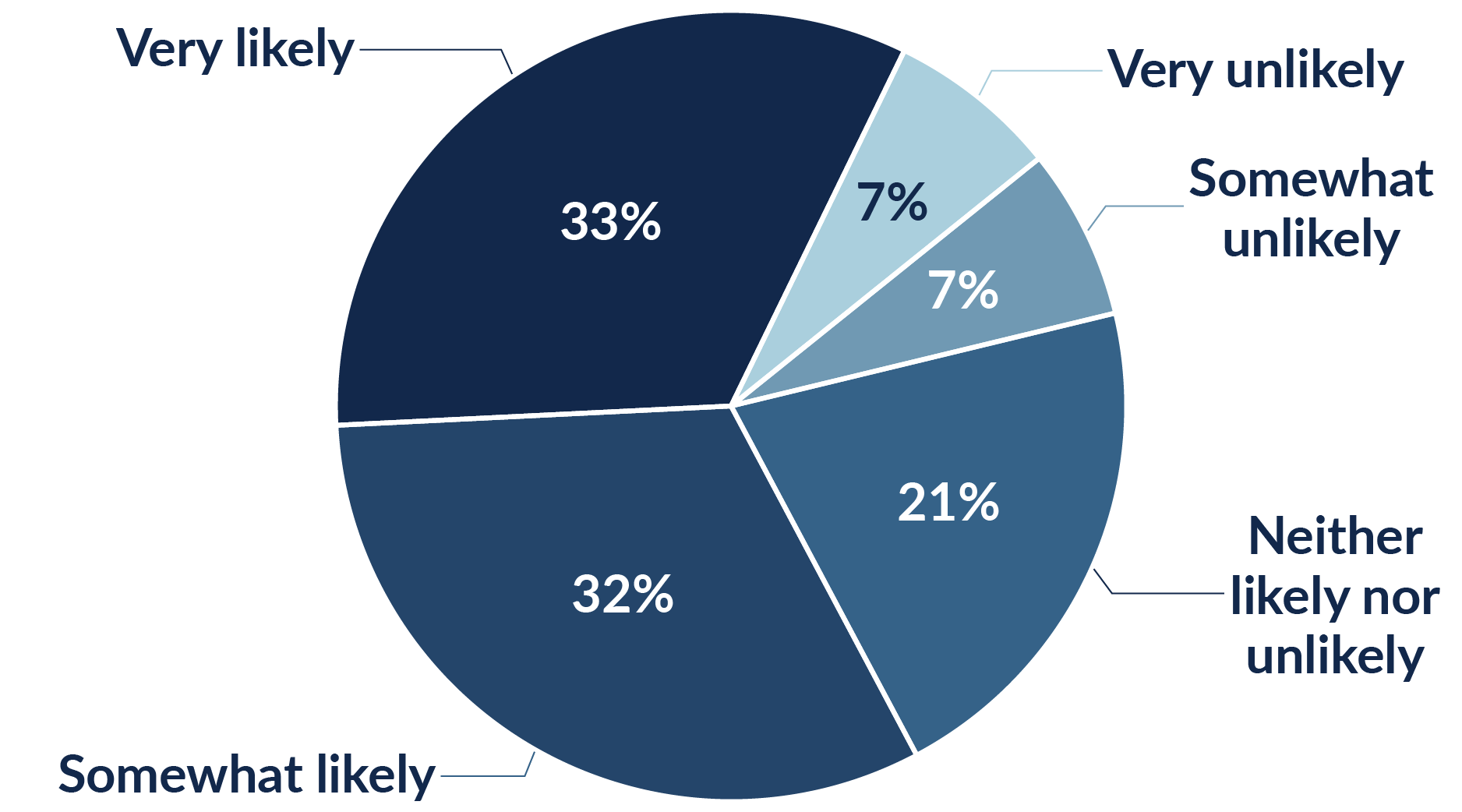

In general, findings about how much trust consumers put into organizations that use AI technologies are inconclusive. For example, according to a survey published by Forbes Advisor, when asked in general if they would trust a business that uses AI, the majority of respondents, 65%, reported they are either somewhat or very likely to impart their trust. Yet, about one in seven respondents, or 14%, reported they would be either somewhat or very unlikely to trust businesses that use AI. An additional one in five, or 21%, were on the fence, neither likely nor unlikely to trust businesses using AI.

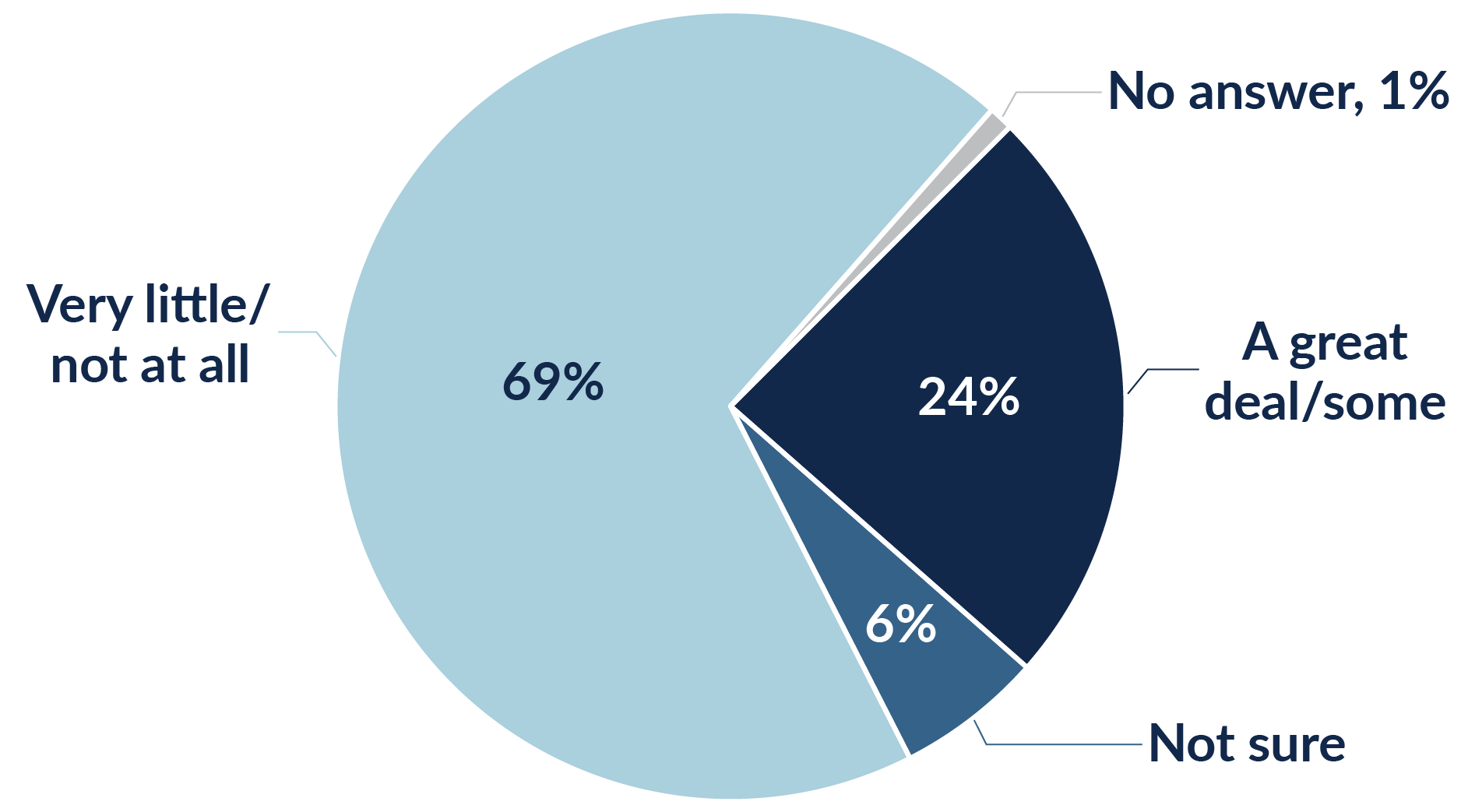

Yet, a Pew Research survey conducted in May 2023 found much higher levels of distrust. Among Americans who had heard of AI, 70% said they had very little or no trust at all in companies to use AI responsibly. About a quarter, or 24%, said they had some or a great deal of trust in them, while about 6% were unsure.

Given the rapidity of AI's technological development and deployment, it is unsurprising that many people have not yet made up their minds about it. Voice recognition data is now being used to improve voice translators, health data from fitness apps is being used for medical research and facial recognition data is being used to grant access to one's financial information. Still, about one in four U.S. adults express uncertainty over whether these use cases are acceptable.

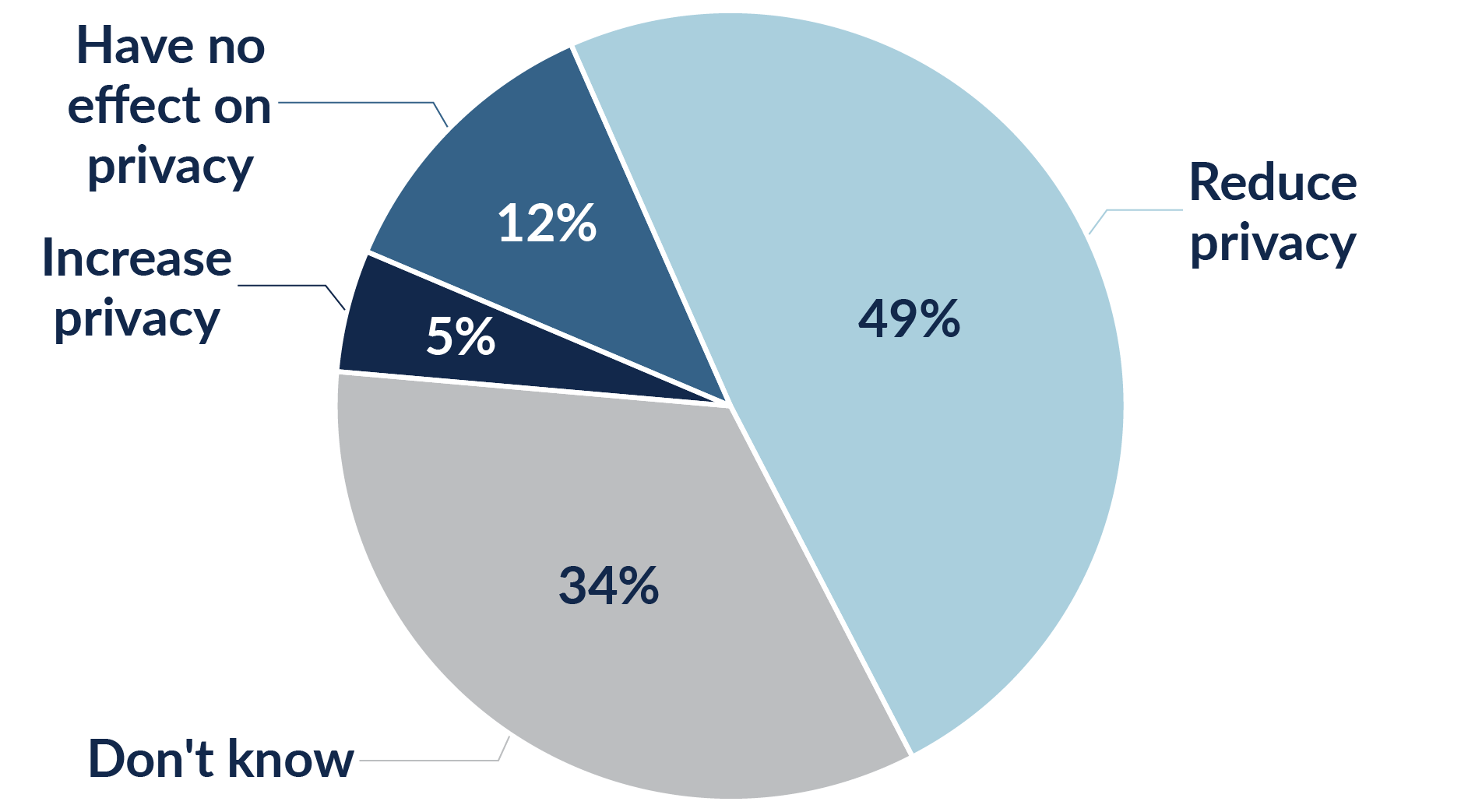

Indeed, some consumers remain wary of the consequences of AI for privacy. In a 2018 survey conducted by the Brookings Institution, 49% of respondents thought AI would lead to a reduction in privacy. About 12% thought it would have no effect, while 5% believed AI would enhance privacy. But, again, a sizeable number of respondents expressed uncertainty about the future of AI, with 34% saying they did not know whether AI would affect privacy.

Research has made progress in exploring the context- and technology-specific factors that affect whether consumers trust AI with their data. The following sections examine how consumer privacy perceptions regarding the use of AI vary across consumer-centric contexts and domains.

Perceived privacy risks of AI

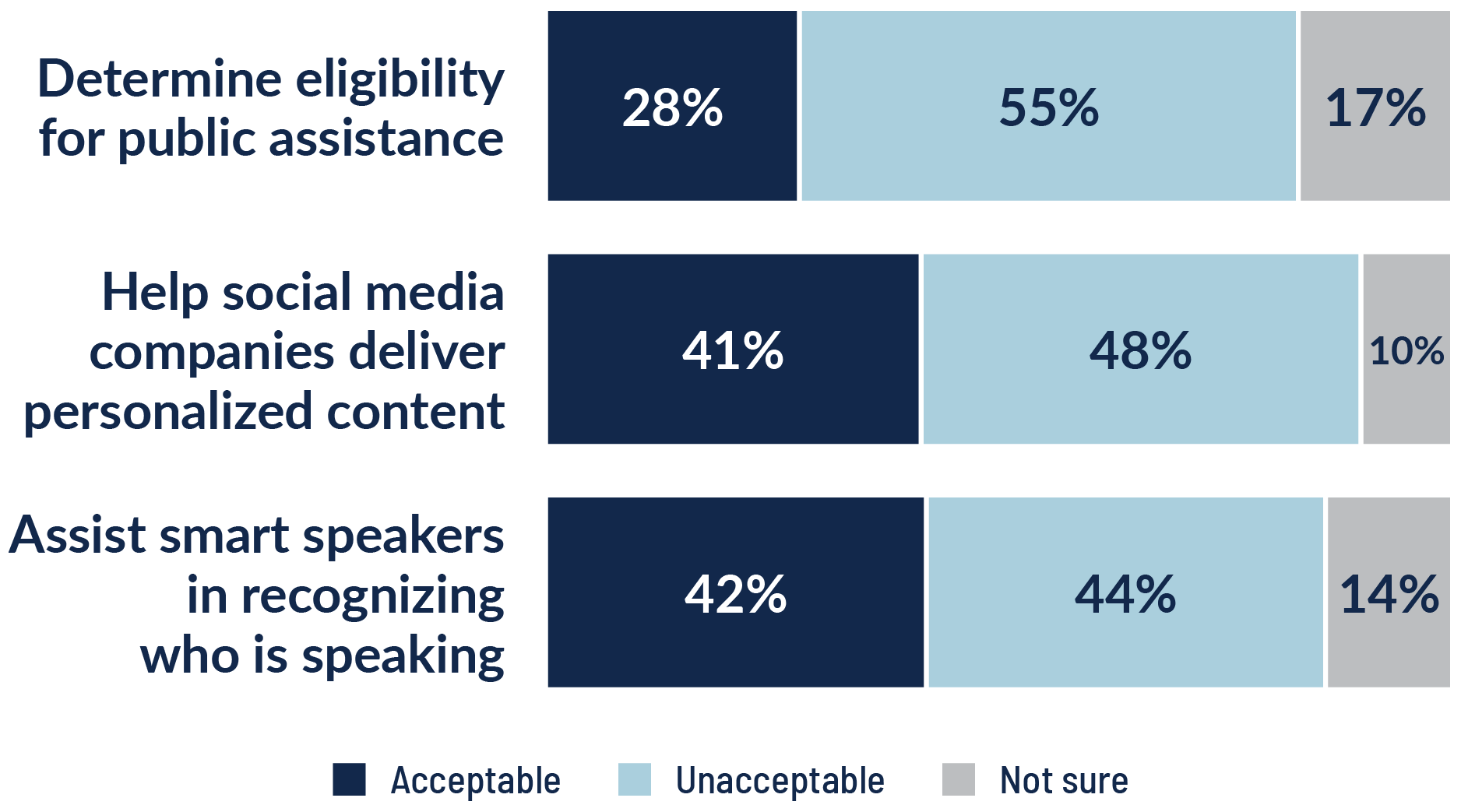

While general trust in AI remains high, several recent studies on consumer reception of AI tools found their acceptance to be strongly tied to the industry and/or the type of data involved. For example, a 2023 Pew Research study revealed people were more or less split on the acceptability of social media companies using AI to analyze what people do on their sites and deliver personalized content, or for smart speakers using AI to assist in the recognition of the speaker's identity. In contrast, a clear majority of people found it unacceptable for AI to be used to determine a person's eligibility for public assistance. In other words, not all uses of AI are equal in the public's mind.

A similar study found 48-55% of respondents were comfortable with AI being used to analyze their social media use and engagement, purchasing habits and driving behavior, but smaller portions were comfortable with AI being used to analyze their text messages or phone conversations, at 33% and 21%, respectively.

To further explore how privacy perceptions related to AI vary across industries and types of data, the next sections take a deep dive into the dynamics of privacy and trust in AI across personal voice assistants, smart devices, self-driving cars, online dating apps, health apps, fintech and the workplace.

The uptake of personal voice assistants has been more limited in the segment of consumers with greater privacy concerns. According to one study, about 7% of people who do not use voice assistants said privacy is their primary reason for not wanting them. Consumers who were the most adamant in their refusal to adopt an intelligent personal assistant, such as Apple's Siri, Amazon's Alexa or Google's Assistant, were more likely than others to harbor concerns about data privacy and security. Similarly, a poll of digital assistant use in the U.K. found 15% of those who do not own a smart device think digital assistants are "creepy."

Even among consumers who use personal voice assistants, privacy concerns linger. A study by PwC found 40% of the people who use voice assistants still have concerns about what happens to their voice data. Another study showed, upon learning of the "always listening" feature of these devices, privacy concerns increased even among individuals who previously expressed no privacy concerns with these devices at all.

Smart devices in the home have also been a particular source of privacy-related fears and anxiety. A 2021 YouGov survey found that a majority, approximately 60-70%, of U.K. adults believe it is very or fairly likely that their smartphones, smart speakers and other smart home devices are listening to their conversations unprompted. In addition, 22% believe they have been served targeted advertisements due to an eavesdropping smart device.

News reports indicate these fears are not entirely unfounded. Researchers have documented smart speaker misactivations, or instances when a device "awakens" even when a wake word is not spoken. One study observed that, given a stream of conversation in the background, smart speakers misactivate about once per hour, with some activations lasting 10 seconds or more. Moreover, makers of smart devices have been reported to employ "human helpers" to review voice and video recordings in their efforts to improve the software and algorithms that underlie these technologies.

And when it comes to AI-driven Internet of Things devices that do not record audio or video — such as smart lightbulbs and thermostats, which may use machine learning algorithms to infer sensitive information including sleep patterns and home occupancy — users remain mostly unaware of their privacy risks. From using inexpensive laser-pointers to hijack voice assistants to hacking into home security cameras, cybercriminals have also been able to infiltrate homes through the security vulnerabilities in smart devices.

Overall, there is a complicated relationship between use of AI-driven smart devices and privacy, with users sometimes willing to trade privacy for convenience. At the same time, given the relative immaturity of the privacy controls on these devices, users remain stuck in a state of "privacy resignation."

Another emergent AI-based technology that many consumers believe poses a threat to privacy is the self-driving or autonomous vehicle. At their core, these automotive devices require massive amounts of visual and other information for their dozens of cameras and sensors to function properly. Given that AVs engage in continuous recording of their surroundings, law enforcement authorities have also shown interest in the street-level data they collect to aid in criminal investigations.

The main privacy concerns individuals have with AVs include not only their ability to engage in location tracking and surveillance but their diminishment of human autonomy — a core aspect of privacy. The very name autonomous vehicle implies an autonomy outside the purview of humans, and, as researchers Kanwaldeep Kaur and Giselle Rampersad pointed out, "This loss of autonomy extends to the loss of privacy." Indeed, a recent Eurobarometer study found about one in five, or 21%, of Europeans agree automated and connected vehicles will pose a threat to privacy. Moreover, 23% of Europeans believe automated and connected vehicles will become a new target for cyberattacks.

Research has also demonstrated that privacy concerns play a critical role in influencing consumers' trust in and adoption of AVs. Kaur and Rampersad found that when consumers had fewer privacy concerns regarding AVs, it had a positive effect on their trust in them, which in turn led to greater adoption. Another interesting strand of research into trust of AVs showed that their anthropomorphism, or embodiment of human features within the technology, increases human trust in their competency.

Entrusting an algorithm to predict love is perhaps the ultimate show of faith in technology. But users of dating apps and websites still harbor significant privacy concerns about how their personal data is used. Among the 30% of U.S. adults who have ever used a dating app or site, 57% report being concerned with how much data these services collect about them, according to Pew Research. These concerns are not unfounded: A premise of online dating is that one should share significant amounts of intimate personal information with strangers to form a desired connection. Indeed, the privacy paradox — whereby one's privacy preferences appear out-of-sync with one's data sharing behaviors — is perhaps most salient in the online dating context.

More importantly, the populations that are more likely to try online dating (53% of 18-29 year olds and 51% of LGBT adults, as of 2022) may be disproportionately exposed to its privacy risks. Given the sensitive information these sites and apps collect, such as "swipe history," sexual orientation data, explicit photos, and even HIV status and most recent testing date, users can be at risk of "blackmail, doxing, financial loss, identity theft, emotional or reputational damage, revenge porn, stalking, or more." Research has also shown older adults, women, those who have had negative experiences with online dating and those who believe it has a mostly negative impact on dating and relationships are more likely to be concerned about data collection on dating websites and apps.

Perhaps no applications of AI are as consequential as those within the health care domain. Health apps and telehealth have transformed consumers' experiences with health care systems and with their own health data. Yet, the digitization of health care delivery has brought about new privacy risks and data-related anxieties for patients. In a 2021 AP poll, 18% of respondents said they were very or somewhat concerned about a lack of privacy when receiving health care at home through telehealth services — and this concern was greatest among nonwhite adults and adults without a college degree. Indeed, more than half, or 52%, of telehealth providers have seen cases where a patient has refused a video call with medical staff due to privacy and data security concerns.

While working from home, especially during the onset of the COVID-19 pandemic, clinical staff have also expressed their own reservations about their ability to maintain the privacy and confidentiality of patient data revealed during telehealth visits. A Kaspersky study found only about three in ten global health care providers are very confident in their ability to prevent cyberattacks, while four in ten said clinicians lack necessary insight into how patient data is protected in telehealth settings.

Other home telehealth devices, such as sensors designed to detect falls, may collect additional data on activities occurring within the household, including times of home occupancy. More recently, menstruation-tracking apps, which have user bases in the hundreds of millions, have aroused health-related privacy concerns globally. A report by Privacy International demonstrated how these apps automatically share "extensive and deeply personal sensitive data with third parties, including Facebook."

At the same time, a desire to improve health outcomes often outweighs consideration of other risks, including privacy-related ones. For example, compared to healthy individuals, patients with chronic conditions tend to be less concerned with technology use in health care management and privacy. One study found "for most people, the convenience of rapid access to information and communication with clinicians outweighed privacy concerns." A study of 20 mature adults who used smart speakers and mobile health apps found that, although they feel a need to protect their data privacy, "they accept the risk of misuse of their private data when using the technology." Similarly, researchers in Germany have shown that when algorithms are used in health care decision-making, individuals are "willing to trade transparency … for small gains in effectiveness." That is, individuals are unlikely to demand accountability from algorithms when efficiency gains can be had, even in sensitive areas such as public health.

In the world of finance, privacy risks are tangible. The resultant harms are not reputational or emotional but monetary. This explains why global regulators have been paying particular attention to the risks of unfair or discriminatory decision-making resulting from the use of AI systems that can affect individuals' eligibility for certain financial services and benefits.

Financial data portability, sharing sensitive personal data with third parties, data broker requirements and use of AI chatbots by financial services entities are a few more financial privacy-related issues that have been on regulators' radar. Indeed, the Biden-Harris administration listed financial services as one of the "critical fields" in its Executive Order 14110 on AI.

The stakes of financial data protection may explain why consumers find themselves in somewhat of a state of "cognitive dissonance" when it comes to their general attitudes toward the use of AI in banking. In a U.K. focus group study, participants were eager to use AI-driven financial services for their perceived convenience and benefits, while simultaneously feeling dislike, distrust or concern about those services and their negative impact on society. Given that nearly seven in ten finance organizations are using or planning to use AI, consumer attitudes about privacy and AI will remain critically important in this space.

From worries about jobs being replaced by AI to the complete absence of privacy at work, AI is also having a significant effect on the workplace. The American Psychological Association's 2023 Work in America Survey found that concerns about AI and monitoring technologies in the workplace are already having a negative impact on workers' psychological and emotional well-being and productivity. Those who are worried about AI making some or all job duties obsolete are much more likely to feel stressed, burnt out, less productive, unmotivated, emotionally exhausted and ineffective at work. Worries about AI, which are held by about 38% of workers overall and by even higher percentages of younger workers (ages 18-25 and 26-43), less educated workers and people of color, are also associated with higher intentions to look for a new job.

This cross-sectoral analysis shows that consumers expect AI to impact everything from how we behave in the "privacy of our own homes" to how we get from point A to point B, from finding love to improving one's health and finances and from emotional well-being to productivity at work. Indeed, so much is at stake for consumers as AI becomes more embedded into our daily lives.

Key takeaways

Consumers' attitudes toward privacy and AI are complicated and defy simple classification. Yet, some key takeaways can be derived from an analysis of recent studies into consumer perceptions at the intersection of privacy and AI:

Most consumers are excited about the benefits AI offers in terms of efficiency, particularly in the delivery of crucial services such as health care and finance.

Simultaneously, most consumers are concerned about the risks AI presents in general, as well as about the risks to privacy in particular.

Also, anywhere from one quarter to one third of consumers remain uncertain or undecided on whether AI will affect their privacy.

Overall, then, consumers have mixed feelings about the privacy risks of AI-driven technologies. All at once, they are excited by their promise, concerned about their risks and uncertain about what AI means for their autonomy and privacy. Importantly, for many consumers, the jury is still out on AI. This substantial segment of uncertain consumers presents an opportunity for organizations to be transparent and provide clearer, more relevant information to all consumers, most of whom would like a better picture of how their personal data is being used and how their privacy will be affected by AI.

It is all but certain that consumers' privacy concerns are on the rise and that AI is becoming one of the main forces driving them. Greater awareness of consumer perceptions at the intersection of privacy and AI fills an important gap in the understanding of what consumers think about a rapidly advancing technology that is disrupting an already fast-moving, data-fueled global economy. Ultimately, organizations that develop and build AI into their products should account for how consumers perceive risks to their privacy, which will foster trust in and enhance the uptake of these new technologies.

This content is eligible for Continuing Professional Education credits. Please self-submit according to CPE policy guidelines.

Contributors:

Müge Fazlioglu

CIPP/E, CIPP/US

Principal Researcher, Privacy Law and Policy

IAPP

New artificial intelligence tools, including virtual personal and voice assistants, chatbots, and large language models like OpenAI's ChatGPT, Meta's Llama 2, and Google's Bard and Gemini rare reshaping the human-technology interface. Given the ongoing development and deployment of AI-powered technologies, a concomitant concern for lawmakers and regulators around the world has been how to minimize their risks to individuals while maximizing their benefits to society. From the Biden-Harris administration's Executive Order 14110 to the political agreement reached on the EU's AI Act, governments around the world are taking steps to regulate AI technologies.

The popularization of generative AI tools, which learn from large quantities of data they scrape from the web, occurs as consumers are increasingly protective of their personal data. As revealed by the IAPP Privacy and Consumer Trust Report 2023, 68% of consumers globally are either somewhat or very concerned about their privacy online. Most find it difficult to understand what types of data about them are being collected and used. The diffusion of AI is one of the newest factors to drive these concerns, with 57% of consumers globally agreeing that AI poses a significant threat to their privacy.

The use of AI poses a significant threat to privacy

Similarly, a 2023 study carried out by KPMG and the University of Queensland found roughly three in four consumers globally feel concerned about the potential risks of AI. While most believe AI will have a positive impact in areas such as helping people find products and services online, helping companies make safer cars and trucks, and helping doctors to provide quality care, at 53% the majority also believe AI will make it harder for people to keep their personal information private.

Indeed, one of the public's biggest concerns related to AI is that it will have a negative effect on individual privacy. According to a recent Pew Research Center survey, 81% of consumers think the information collected by AI companies will be used in ways people are uncomfortable with, as well as in ways that were not originally intended. Another study in January 2024 by KPMG found 63% of consumers were concerned about the potential for generative AI to compromise an individual's privacy by exposing personal data to breaches or through other forms of unauthorized access or misuse.

Thus, consumer perceptions of AI are being shaped by their feelings about how these emerging technologies will affect their privacy. A wide range of IAPP and third-party studies have investigated the intersection of attitudes and knowledge around both AI and privacy issues across sectors — from financial technology to dating apps to wearable health care technologies. A synthesis of this literature provides insights into why protecting consumer privacy matters for organizations that develop and deploy AI tools. Ultimately, businesses and governments alike have central roles in shaping the foundational attitudes and trust upon which the digital economy is built. Being cognizant of consumer perspectives on privacy and AI, therefore, is of key importance across both the public and the private sector.

Consumers' privacy concerns

Far from the notion that "privacy is dead," research into privacy perceptions has consistently demonstrated "consumers fundamentally care about privacy and often act on that concern." Consumers globally are worried about the ubiquity of data collection and new uses of data by emerging technologies, including AI.

For example, a 2019 Ipsos survey found 80% of respondents across 24 countries expressed concern about their online privacy. As further evidence of these trends over time, Cisco's 2021 study of consumer confidence revealed nearly half of consumers, at 46%, do not feel they are able to effectively protect their personal data. Of these, the majority, at 76%, said this was because it is too hard to figure out what companies are doing with their data. In addition, 36% said it was because they do not trust companies to follow their stated policies. Similarly, a 2021 KPMG study found that four in 10 U.S. consumers do not trust companies to use their data in an ethical way, while 13% do not even trust their own employer.

These privacy attitudes are showing signs of "spillage" into other relevant domains, namely AI. This is leading to greater apprehension by consumers as new technologies are introduced. For example, at 52% more U.S. adults are concerned, rather than excited, at 10%, about AI becoming embedded into their daily lives.

The complexity of privacy violations

The preferences and expectations of individuals with respect to how their data is collected and used are "complicated" — to say the least — and they vary based on things such as the purpose of the collection and analysis of data, the manner in which it is collected, and with whom it is shared. Put more simply, the purpose for which data is collected and used has a large effect on whether individuals perceive it as privacy invasive. For example, employees are less concerned when it comes to their employers collecting information that is directly related to their job duties. A KPMG study found only 24% of employees express concern about employers collecting data about their productivity, while only 17% are concerned about employers tracking when they start work. By contrast, more employees find it unacceptable for employers to view their social media accounts, at 44%, monitor their instant messaging, at 32%, or review their browsing history, at 32%.

Studies show a critical subsegment of consumers have become less willing to share their personal information and are engaging in what is known as privacy self-defense. Examples of this from the World Economic Forum include "withholding personal information, giving false biographical details or removing information from mailing lists altogether." It's also important to keep in mind that different individuals and groups may respond differently to the risks of data collection, as "not everybody is equally comfortable with sharing their data." Trust in the financial sector, for instance, varies by racial and age groups, with racial minorities less likely to trust financial institutions to process their data and younger respondents more likely to do so. The nature of an individual's privacy concerns can also vary by gender, with women more concerned with data sharing implications that pertain to their physical safety.

As legal scholars Danielle Keats Citron and Daniel J. Solove explain in their article, privacy violations have a variety of origins. They can stem from a sense that a promise regarding one's data has been broken, i.e., about how it will be collected, used and/or disclosed. Privacy harms may also be brought about by floods of unwanted advertising and spam. They may also occur when an individual's expectations of which third parties their data will be shared with are thwarted, leading to some kind of data-related detriment.

Similarly, as the World Economic Forum points out in its Global Risks Report, loss of control over one's data can lead to a variety of privacy harms, from anxiety about personal attacks, fraud, cyberbullying and stalking, to a lack of agency as well as apathy over one's ability to secure one's data. In a Norton and Harris Poll survey of individuals who recently detected unauthorized access to their accounts, the most common emotions they experienced were anger, stress and vulnerability. Feeling violated, scared, powerless and embarrassed were also common in the aftermath of such cybercrimes.

Rather than magnitude or severity, what makes privacy harms so damaging to individuals and society overall is the sheer frequency of their occurrence. As Citron and Solove further explain:

"For many privacy harms, the injury may appear small when viewed in isolation, such as the inconvenience of receiving an unwanted email or advertisement or the failure to honor people's expectation that their data will not be shared with third parties. But when done by hundreds or thousands of companies, the harm adds up. Moreover, these small harms are dispersed among millions (and sometimes billions) of people. Over time, as people are each inundated by a swarm of small harms, the overall societal impact is significant."

—Danielle Keats Citron and Daniel J. Solove

Thus, decades of privacy harm from all sides — from cybercriminals to government surveillance programs to data-hungry private actors — have decreased the public's trust in the collection and processing of personal data. Indeed, numerous threats, including errant data collection and use, lack of satisfactory legal and policy solutions and regular emergence of new privacy-invasive technologies, are enhancing consumers' privacy concerns. As time passes, these privacy-related attitudes become entrenched behaviors rooted in distrust of data collection that generate severe and tangible consequences for the global digital economy. With the incorporation of data-driven AI tools into a growing array of business applications — from customer service to marketing to fintech and telehealth — consumer privacy concerns will continue to rise.

Against this global background of growing privacy concerns, the following sections explore the dynamics of trust among consumers for the use of AI in business.

Consumer trust in AI

In general, findings about how much trust consumers put into organizations that use AI technologies are inconclusive. For example, according to a survey published by Forbes Advisor, when asked in general if they would trust a business that uses AI, the majority of respondents, 65%, reported they are either somewhat or very likely to impart their trust. Yet, about one in seven respondents, or 14%, reported they would be either somewhat or very unlikely to trust businesses that use AI. An additional one in five, or 21%, were on the fence, neither likely nor unlikely to trust businesses using AI.

Yet, a Pew Research survey conducted in May 2023 found much higher levels of distrust. Among Americans who had heard of AI, 70% said they had very little or no trust at all in companies to use AI responsibly. About a quarter, or 24%, said they had some or a great deal of trust in them, while about 6% were unsure.

Given the rapidity of AI's technological development and deployment, unsurprisingly, many people have not yet made up their minds about it. Voice recognition data is now being used to improve voice translators, health data from fitness apps is being used for medical research and facial recognition data is being used to grant access to one's financial information. Still, about one in four U.S. adults express uncertainty over whether these use cases are acceptable.

Indeed, some consumers remain wary of the consequences of AI for privacy. In a 2018 survey conducted by the Brookings Institution, 49% of respondents thought AI would lead to a reduction in privacy. About 12% thought it would have no effect, while 5% believed AI would enhance privacy. But, again, a sizeable number of respondents expressed uncertainty about the future of AI, with 34% saying they did not know whether AI would affect privacy.

Research has made progress in exploring the context- and technology-specific factors that affect whether consumers trust AI with their data. The following sections examine how consumer privacy perceptions regarding the use of AI vary across consumer-centric contexts and domains.

Perceived privacy risks of AI

While general trust in AI remains high, several recent studies on consumer reception of AI tools found their acceptance to be strongly tied to the industry and/or the type of data involved. For example, a 2023 Pew Research study revealed people were more or less split on the acceptability of social media companies using AI to analyze what people do on their sites and deliver personalized content, or for smart speakers using AI to assist in the recognition of the speaker's identity. In contrast, a clear majority of people found it unacceptable for AI to be used to determine a person's eligibility for public assistance. In other words, not all uses of AI are equal in the public's mind.

A similar study found 48-55% of respondents were comfortable with AI being used to analyze their social media use and engagement, purchasing habits and driving behavior, but smaller portions were comfortable with AI being used to analyze their text messages or phone conversations, at 33% and 21% respectively.

To further explore how privacy perceptions related to AI vary across industries and types of data, the next sections take a deep dive into the dynamics of privacy and trust in AI across personal voice assistants, smart devices, health apps, self-driving cars, online data apps, fintech and in the workplace.

The uptake of personal voice assistants has been more limited in the segment of consumers with greater privacy concerns. According to one study, about 7% of people who do not use voice assistants said privacy is their primary reason for not wanting them. Consumers who were the most adamant in their refusal to adopt an intelligent personal assistant, such as Apple's Siri, Amazon's Alexa or Google's Assistant, were more likely than others to harbor concerns about data privacy and security. Similarly, a poll of digital assistant use in the U.K. found 15% of those who do not own a smart device think digital assistants are "creepy."

Even among consumers who use personal voice assistants, privacy concerns linger. A study by PwC found 40% of the people who use voice assistants still have concerns about what happens to their voice data. Another study showed, upon learning of the "always listening" feature of these devices, privacy concerns increased even among individuals who previously expressed no privacy concerns with these devices at all.

Smart devices in the home have also been a particular source of privacy-related fears and anxiety. A 2021 YouGov survey found that a majority, approximately 60-70%, of U.K. adults believe it is very or fairly likely that their smartphones, smart speakers and other smart home devices are listening to their conversations unprompted. In addition, 22% believe they have been served targeted advertisements due to an eavesdropping smart device.

News reports indicate these fears are not entirely unfounded. Researchers have documented smart speaker misactivations, or instances when a device "awakens" even when a wake word is not spoken. One study observed that, given a stream of conversation in the background, smart speakers misactivate about once per hour, with some misactivations lasting 10 seconds or more. Moreover, makers of smart devices have been reported to employ "human helpers" to review voice and video recordings in their efforts to improve the software and algorithms that underlie these technologies.

And when it comes to AI-driven Internet of Things devices that do not record audio or video — such as smart lightbulbs and thermostats, which may use machine learning algorithms to infer sensitive information including sleep patterns and home occupancy — users remain mostly unaware of their privacy risks. From using inexpensive laser-pointers to hijack voice assistants to hacking into home security cameras, cybercriminals have also been able to infiltrate homes through the security vulnerabilities in smart devices.

Overall, there is a complicated relationship between use of AI-driven smart devices and privacy, with users sometimes willing to trade privacy for convenience. At the same time, given the relative immaturity of the privacy controls on these devices, users remain stuck in a state of "privacy resignation."

Another emergent AI-based technology that many consumers believe poses a threat to privacy is the self-driving or autonomous vehicle. At their core, these automotive devices require massive amounts of visual and other information for their dozens of cameras and sensors to function properly. Given that AVs engage in continuous recording of their surroundings, law enforcement authorities have also shown interest in the street-level data they collect to aid in criminal investigations.

The main privacy concerns individuals have with AVs include not only their ability to engage in location tracking and surveillance but their diminishment of human autonomy — a core aspect of privacy. The very name autonomous vehicle implies an autonomy outside the purview of humans, and, as researchers Kanwaldeep Kaur and Giselle Rampersad pointed out, "This loss of autonomy extends to the loss of privacy." Indeed, a recent Eurobarometer study found about one in five, or 21%, of Europeans agree automated and connected vehicles will pose a threat to privacy. Moreover, 23% of Europeans believe automated and connected vehicles will become a new target for cyberattacks.

Research has also demonstrated that privacy concerns play a critical role in influencing consumers' trust in and adoption of AVs. Kaur and Rampersad found when consumers had fewer privacy concerns regarding AVs, it had a positive effect on their trust in them, which in turn led to greater adoption. Another interesting strand of research into trust of AVs showed that their anthropomorphism, or embodiment of human features within the technology, increases human trust in their competency.

Entrusting an algorithm to predict love is perhaps the ultimate show of faith in technology. But users of dating apps and websites still harbor significant privacy concerns about how their personal data is used. Among the 30% of U.S. adults who have ever used a dating app or site, 57% report being concerned with how much data these services collect about them, according to Pew Research. These concerns are not unfounded: A premise of online dating is that one should share significant amounts of intimate personal information with strangers to form a desired connection. Indeed, the privacy paradox — whereby one's privacy preferences appear out-of-sync with one's data sharing behaviors — is perhaps most salient in the online dating context.

More importantly, the populations that are more likely to try online dating — 53% of 18-29 year olds and 51% of LGBT adults, as of 2022 — may disproportionately be exposed to their privacy risks. Given the nature of the type of sensitive information these sites and apps collect, i.e., "swipe history," sexual orientation data, explicit photos, and even HIV status and most recent testing date, users can be at risk of "blackmail, doxing, financial loss, identity theft, emotional or reputational damage, revenge porn, stalking, or more." Research has also shown older adults, women, those who have had negative experiences with online dating and those who believe it has a mostly negative impact on dating and relationships are more likely to be concerned about data collection on dating websites and apps.

Perhaps no applications of AI are as consequential as those within the health care domain. Health apps and telehealth have transformed consumers' experiences with health care systems and with their own health data. Yet, the digitization of health care delivery has brought about new privacy risks and data-related anxieties for patients. In a 2021 AP poll, 18% of respondents said they were very or somewhat concerned about a lack of privacy when receiving health care at home through telehealth services — and this concern was greatest among nonwhite adults and adults without a college degree. Indeed, more than half, or 52%, of telehealth providers have seen cases where a patient has refused a video call with medical staff due to privacy and data security concerns.

While working from home, especially during the onset of the COVID-19 pandemic, clinical staff have also expressed their own reservations about their ability to maintain the privacy and confidentiality of patient data revealed during telehealth visits. A Kaspersky study found only about three in 10 global health care providers are very confident in their ability to prevent cyberattacks, while four in 10 said clinicians lack necessary insight into how patient data is protected in telehealth settings.

Other home telehealth devices, such as sensors designed to detect falls, may collect additional data on activities occurring within the household, including times of home occupancy. More recently, menstruation-tracking apps, which have user bases in the hundreds of millions, have aroused health-related privacy concerns globally. A report by Privacy International demonstrated how these apps automatically share "extensive and deeply personal sensitive data with third parties, including Facebook."

At the same time, a desire to improve health outcomes often outweighs consideration of other risks, including privacy-related ones. For example, compared to healthy individuals, patients with chronic conditions tend to be less concerned with technology use in health care management and privacy. One study found "for most people, the convenience of rapid access to information and communication with clinicians outweighed privacy concerns." A study of 20 mature adults who used smart speakers and mobile health apps found that, although they feel a need to protect their data privacy, "they accept the risk of misuse of their private data when using the technology." Similarly, researchers in Germany have shown that when algorithms are used in health care decision-making, individuals are "willing to trade transparency … for small gains in effectiveness." That is, individuals are unlikely to demand accountability from algorithms when efficiency gains can be had, even in sensitive areas such as public health.

In the world of finance, privacy risks are tangible. The resultant harms are not reputational or emotional but monetary. This explains why global regulators have been paying particular attention to the risks of unfair or discriminatory decision-making resulting from the use of AI systems that can affect individuals' eligibility for certain financial services and benefits.

Financial data portability, sharing sensitive personal data with third parties, data broker requirements and use of AI chatbots by financial services entities are a few more financial privacy-related issues that have been on regulators' radar. Indeed, the Biden-Harris administration listed financial services as one of the "critical fields" in its Executive Order 14110 on AI.

The stakes of financial data protection may explain why consumers find themselves in somewhat of a state of "cognitive dissonance" when it comes to their general attitudes toward the use of AI in banking. In a U.K. focus group study, participants were eager to use AI-driven financial services for their perceived convenience and benefits, while simultaneously feeling dislike, distrust or concern about those services and their negative impact on society. Given that nearly seven in 10 finance organizations are using or planning to use AI, consumer attitudes about privacy and AI will remain critically important in this space.

From worries about jobs being replaced by AI to the complete absence of privacy at work, AI is also having a significant effect on the workplace. The American Psychological Association's 2023 Work in America Survey found that concerns about AI and monitoring technologies in the workplace are already having a negative impact on workers' psychological and emotional well-being and productivity. Those who are worried about AI making some or all job duties obsolete are much more likely to feel stressed, burnt out, less productive, unmotivated, emotionally exhausted and ineffective at work. Worries about AI, which are held by about 38% of workers overall, and even higher percentages of younger workers between the ages of 18-25 and 26-43, less educated workers and people of color, are also associated with higher intentions to look for a new job.

This cross-sectoral analysis shows that consumers expect AI to impact everything from how we behave in the "privacy of our own homes" to how we get from point A to point B, from finding love to improving one's health and finances and from emotional well-being to productivity at work. Indeed, so much is at stake for consumers as AI becomes more embedded into our daily lives.

Key takeaways

Unpacking consumers' attitudes toward privacy and AI is complicated, and they defy simple classification. Yet, some key takeaways can be derived from an analysis of recent studies into consumer perceptions at the intersection of privacy and AI:

Most consumers are excited about the benefits AI offers in terms of efficiency, particularly in the delivery of crucial services such as health care and finance.

Simultaneously, most consumers are concerned about the risks AI presents in general, as well as about the risks to privacy in particular.

Also, anywhere from one quarter to one third of consumers remain uncertain or undecided on whether AI will affect their privacy.

Overall, then, consumers have mixed feelings about the privacy risks of AI-driven technologies. All at once, they are excited by their promise, concerned about their risks and uncertain about what AI means for their autonomy and privacy. Importantly, for many consumers, the jury is still out on AI. This substantial segment of uncertain consumers presents an opportunity for organizations to be transparent and provide clearer, more relevant information to all consumers, most of whom would like a better picture of how their personal data is being used and how their privacy will be affected by AI.

It is all but certain that consumers' privacy concerns are on the rise and that AI is becoming one of the main forces driving them. Greater awareness of consumer perceptions at the intersection of privacy and AI fills an important gap in the understanding of what consumers think about a rapidly advancing technology that is disrupting an already fast-moving, data-fueled global economy. Ultimately, organizations that develop and build AI into their products should account for how consumers perceive risks to their privacy, which will foster trust in and enhance the uptake of these new technologies.

This content is eligible for Continuing Professional Education credits. Please self-submit according to CPE policy guidelines.
