AI Governance in Practice Report 2024

This report by the IAPP and FTI Consulting aims to inform AI governance professionals of the most significant challenges to be aware of when building and maturing an AI governance program.

Published: 3 June 2024

Recent, rapidly advancing breakthroughs in machine learning technology have transformed the landscape of AI.

AI systems have become powerful engines capable of learning autonomously from vast amounts of information and generating entirely new data. As a result, society is undergoing significant disruption as AI grows more sophisticated and a new era of technological innovation emerges.

As businesses grapple with a future in which the boundaries of AI continue to expand, their leaders are responsible for managing the various risks and harms of AI so that its benefits can be realized safely and responsibly.

Critically, these benefits come with serious concerns about the safety of this technology and its potential, if left unchecked, to disrupt the world and harm individuals. Confusion about how the technology works, the introduction and proliferation of bias in algorithms, the spread of misinformation and violations of privacy rights represent only a sliver of the potential risks.

The practice of AI governance is designed to tackle these issues. It encompasses the growing combination of principles, laws, policies, processes, standards, frameworks, industry best practices and other tools incorporated across the design, development, deployment and use of AI.

While relatively new, the field of AI governance is maturing. Government authorities around the world are beginning to develop targeted regulatory requirements, and governance experts are supporting the creation of accepted principles, such as the Organisation for Economic Co-operation and Development's AI Principles, as well as emerging best practices and tools for various uses of AI in different domains.

There are many challenges and potential solutions for AI governance. Their proximity and significance vary with an organization's role, footprint, broader risk-governance profile and maturity. This report aims to inform the growing, increasingly empowered and increasingly important community of AI governance professionals about the most common and significant challenges to be aware of when building and maturing an AI governance program. It offers actionable, real-world insights into applicable law and policy, a variety of governance approaches, and tools used to manage risk.

Some challenges to AI governance overlap and run through a range of themes, so a solution emerging for one thematic challenge may also be leveraged for another. Conversely, in certain circumstances, specific challenges and their associated solutions may conflict and require reconciliation with other approaches. The report identifies some of these potential overlaps and conflicts throughout.

Questions about whether and when organizations should prioritize AI governance are being answered with "yes" and "now," respectively. This report therefore focuses on how organizations can approach, build and leverage AI governance amid an increasingly voluminous and complex landscape of applicable law and policy.

Given the complexity and transformative nature of AI, lawmakers and policymakers have produced a vast and growing body of principles, laws, policies, frameworks, declarations, voluntary commitments, standards and emerging best practices that can be challenging to navigate. Many of these sources interact with each other, either directly or through the issues they cover.

The following are examples of some of the most prominent and consequential AI governance efforts:

- OECD AI Principles

- European Commission's Ethics Guidelines for Trustworthy AI

- UNESCO Recommendation on the Ethics of AI

- The White House Blueprint for an AI Bill of Rights

- G7 Hiroshima Principles

- EU AI Act

- EU Product Liability Directive, proposed

- EU General Data Protection Regulation

- Canada's Artificial Intelligence and Data Act, proposed

- U.S. AI Executive Order 14110

- Sectoral U.S. legislation for employment, housing and consumer finance

- U.S. state laws, such as Colorado AI Act, Senate Bill 24-205

- China's Interim Measures for the Management of Generative AI Services

- The United Arab Emirates Amendment to Regulation 10 to include new rules on Processing Personal Data through Autonomous and Semi-autonomous Systems

- Digital India Act

- OECD Framework for the Classification of AI Systems

- NIST AI Risk Management Framework

- NIST Special Publication 1270: Towards a Standard for Identifying and Managing Bias in AI

- Singapore AI Verify

- The Council of Europe's Human Rights, Democracy, and the Rule of Law Assurance Framework for AI systems

- Bletchley Declaration

- The Biden-Harris Administration's voluntary commitments from leading AI companies

- Canada's guide on the use of generative AI

- ISO/IEC JTC 1/SC 42

- The Institute of Electrical and Electronics Engineers Standards Association P7000

- The European Committee for Electrotechnical Standardization's AI standards for the EU AI Act

- The VDE Association's AI Quality and Testing Hub

- The British Standards Institution and Alan Turing Institute AI Standards Hub

- Canada's AI and Data Standards Collaborative

With private investment, global adoption rates and regulatory activity on the rise, as well as the growing maturity of the technology, AI is increasingly becoming a strategic priority for organizations and governments worldwide. Organizations of all sizes and industries are increasingly engaging with AI systems at various stages of the technology product supply chain.

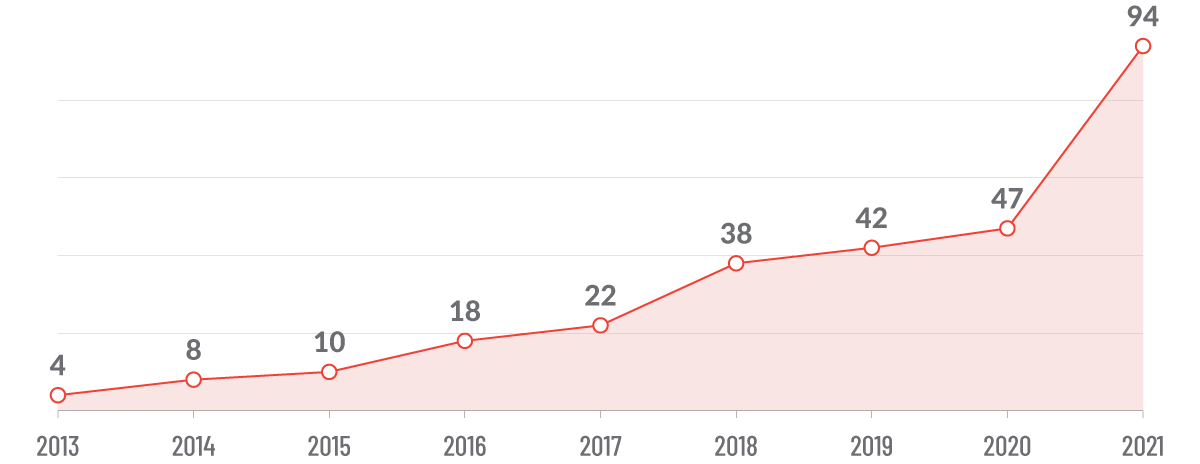

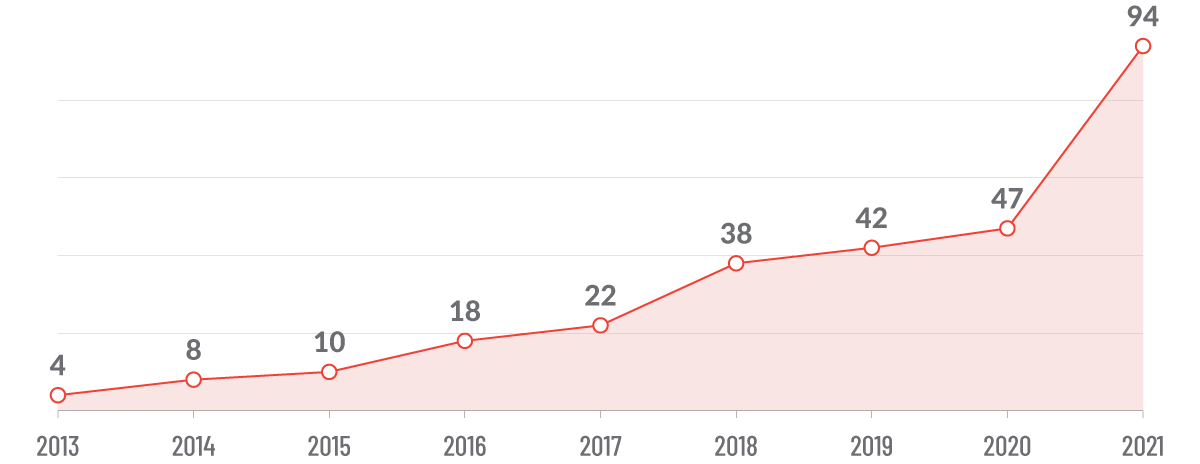

Global AI private investment

(USD billion, 2021)

AI Risks

- Individuals and society: Risk of bias or other detrimental impact on individuals.

- Legal and regulatory: Risk of noncompliance with legal and contractual obligations.

- Financial: Risk of financial implications, e.g., fines, legal or operational costs, or lost profit.

- Reputational: Risk of damage to reputation and market competitiveness.
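One way to put these four categories to work is a simple risk register. The Python below is a minimal sketch under stated assumptions, not a method from the report. The class and function names (`RiskCategory`, `RiskEntry`, `high_risks`, `count_by_category`), the 1-to-5 likelihood and impact scales, the likelihood-times-impact score and the threshold of 15 are all hypothetical choices made for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import List


class RiskCategory(Enum):
    """The four AI risk categories listed in the report."""
    INDIVIDUALS_AND_SOCIETY = "Individuals and society"
    LEGAL_AND_REGULATORY = "Legal and regulatory"
    FINANCIAL = "Financial"
    REPUTATIONAL = "Reputational"


@dataclass
class RiskEntry:
    """One row in a hypothetical AI risk register."""
    system: str
    description: str
    category: RiskCategory
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Likelihood x impact is a common heuristic, not a method the report prescribes.
        return self.likelihood * self.impact


def high_risks(register: List[RiskEntry], threshold: int = 15) -> List[RiskEntry]:
    """Return entries scoring at or above the threshold, highest score first."""
    flagged = [entry for entry in register if entry.score >= threshold]
    return sorted(flagged, key=lambda entry: entry.score, reverse=True)


def count_by_category(register: List[RiskEntry]) -> Counter:
    """Count register entries per risk category."""
    return Counter(entry.category for entry in register)


if __name__ == "__main__":
    # Hypothetical example entries for two illustrative systems.
    register = [
        RiskEntry("resume-screener", "Model disadvantages some applicant groups",
                  RiskCategory.INDIVIDUALS_AND_SOCIETY, likelihood=4, impact=5),
        RiskEntry("resume-screener", "No documented legal basis for processing",
                  RiskCategory.LEGAL_AND_REGULATORY, likelihood=2, impact=4),
        RiskEntry("support-chatbot", "Chatbot publishes inaccurate answers",
                  RiskCategory.REPUTATIONAL, likelihood=3, impact=3),
    ]
    for entry in high_risks(register):
        print(entry.score, entry.category.value, entry.description)
```

Keeping the category as an enum means that every register entry maps to exactly one of the report's four risk types. An organization that already runs an enterprise risk framework would more likely reuse that framework's own scales and thresholds.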

The AI life cycle

- Plan and document the system's concept and objectives.

- Plan for legal and regulatory compliance.

- Gather data and check for data quality.

- Document and assess metadata and characteristics of the dataset.

- Consider legal and regulatory requirements.

- Select the algorithm.

- Train the model.

- Carry out testing, validation and verification.

- Calibrate.

- Carry out input interpretation.

- Pilot and perform compatibility checks.

- Verify legal and regulatory compliance.

- Monitor performance and mitigate risks post deployment.
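The life cycle above can also be tracked as an ordered checklist. The following Python is a minimal sketch that treats the stages as strictly sequential. That is a simplifying assumption, since real projects often iterate between stages. The names `LIFECYCLE_STAGES`, `missing_prerequisites` and `next_stage` are hypothetical.

```python
from typing import List, Optional, Set, Tuple

# Stages of the AI life cycle, in the order the report lists them.
LIFECYCLE_STAGES: Tuple[str, ...] = (
    "Plan and document the system's concept and objectives",
    "Plan for legal and regulatory compliance",
    "Gather data and check for data quality",
    "Document and assess metadata and characteristics of the dataset",
    "Consider legal and regulatory requirements",
    "Select the algorithm",
    "Train the model",
    "Carry out testing, validation and verification",
    "Calibrate",
    "Carry out input interpretation",
    "Pilot and perform compatibility checks",
    "Verify legal and regulatory compliance",
    "Monitor performance and mitigate risks post deployment",
)


def missing_prerequisites(stage: str, completed: Set[str]) -> List[str]:
    """Return the earlier stages not yet marked complete.

    Assumes a strictly sequential life cycle, which is a simplification.
    """
    if stage not in LIFECYCLE_STAGES:
        raise ValueError(f"unknown stage: {stage!r}")
    index = LIFECYCLE_STAGES.index(stage)
    return [s for s in LIFECYCLE_STAGES[:index] if s not in completed]


def next_stage(completed: Set[str]) -> Optional[str]:
    """Return the first stage, in order, that is not yet complete, or None if all are."""
    for stage in LIFECYCLE_STAGES:
        if stage not in completed:
            return stage
    return None
```

For example, calling `missing_prerequisites("Pilot and perform compatibility checks", completed)` before a pilot would list any skipped earlier steps, including the compliance-planning steps. A governance team could then decide whether a skip is acceptable.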

Organizations may seek to leverage existing organizational risk frameworks to tackle AI risk at the enterprise, product and operational levels. Tailoring their approach to AI governance to their specific AI product risks, business needs and broader strategic objectives can help organizations establish the building blocks of trustworthy and responsible AI. A key goal of an AI governance program is to facilitate responsible innovation. Flexibly adapting existing governance processes can help businesses explore the disruptive competitive opportunities that AI technologies present while minimizing the associated financial, operational and reputational risks.

This content is eligible for Continuing Professional Education credits. Please self-submit according to CPE policy guidelines.

Contributors:

Ashley Casovan, Managing Director, AI Governance Center, IAPP

Joe Jones, Research and Insights Director, IAPP

Uzma Chaudhry, CIPP/E, Former AI Governance Center Fellow, ATI
