AI Governance Profession Report 2025

This report, published by the IAPP and Credo AI, provides insights on building an AI governance program and professionalizing AI governance.

Published: 16 April 2025

AI governance is proving its value to organizations.

The promulgation of artificial intelligence governance legislation, regulations and standards, combined with increasingly complex and demanding sociotechnical pressures, has led organizations to prioritize building and implementing AI governance programs.

This report, and the data within it, profiles the extent to which organizations are implementing AI governance programs, and how they are doing so. Indeed, survey data shows how the development and deployment of AI by organizations very often goes hand in hand with AI governance.

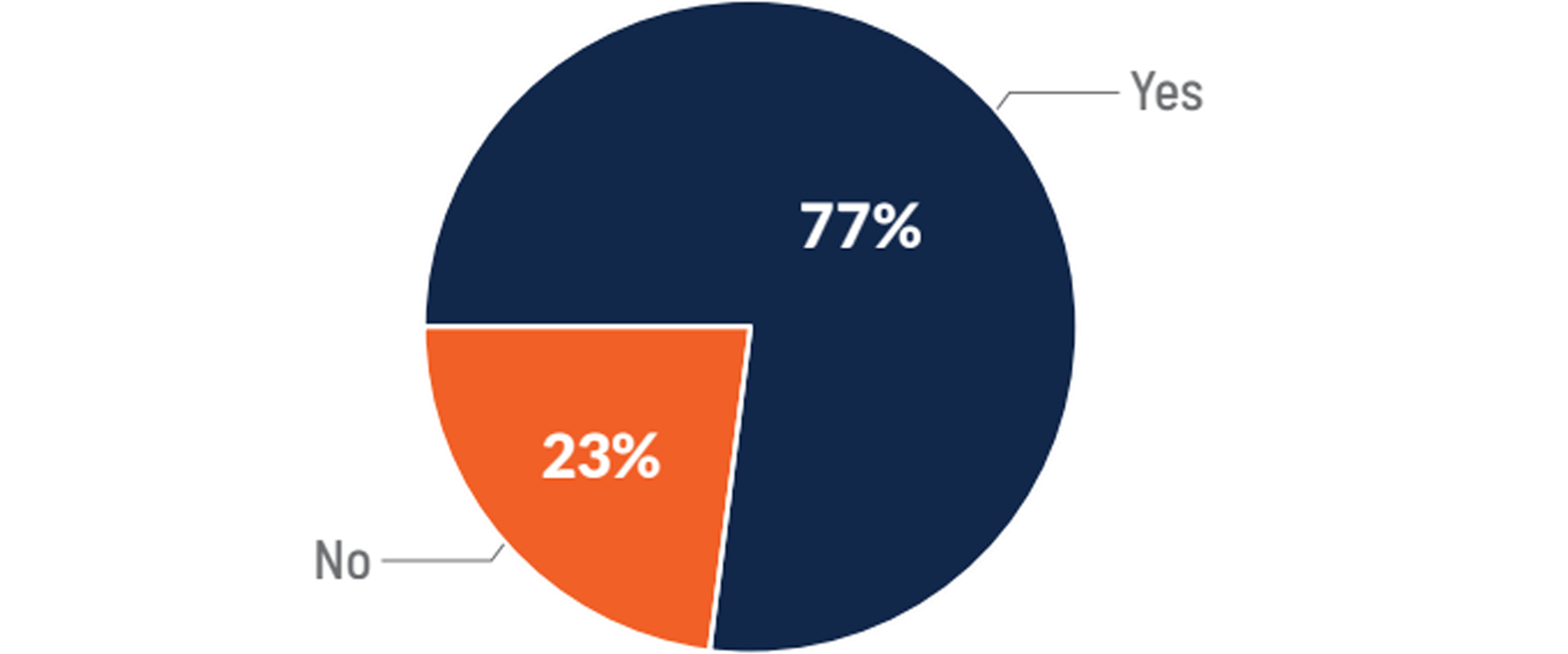

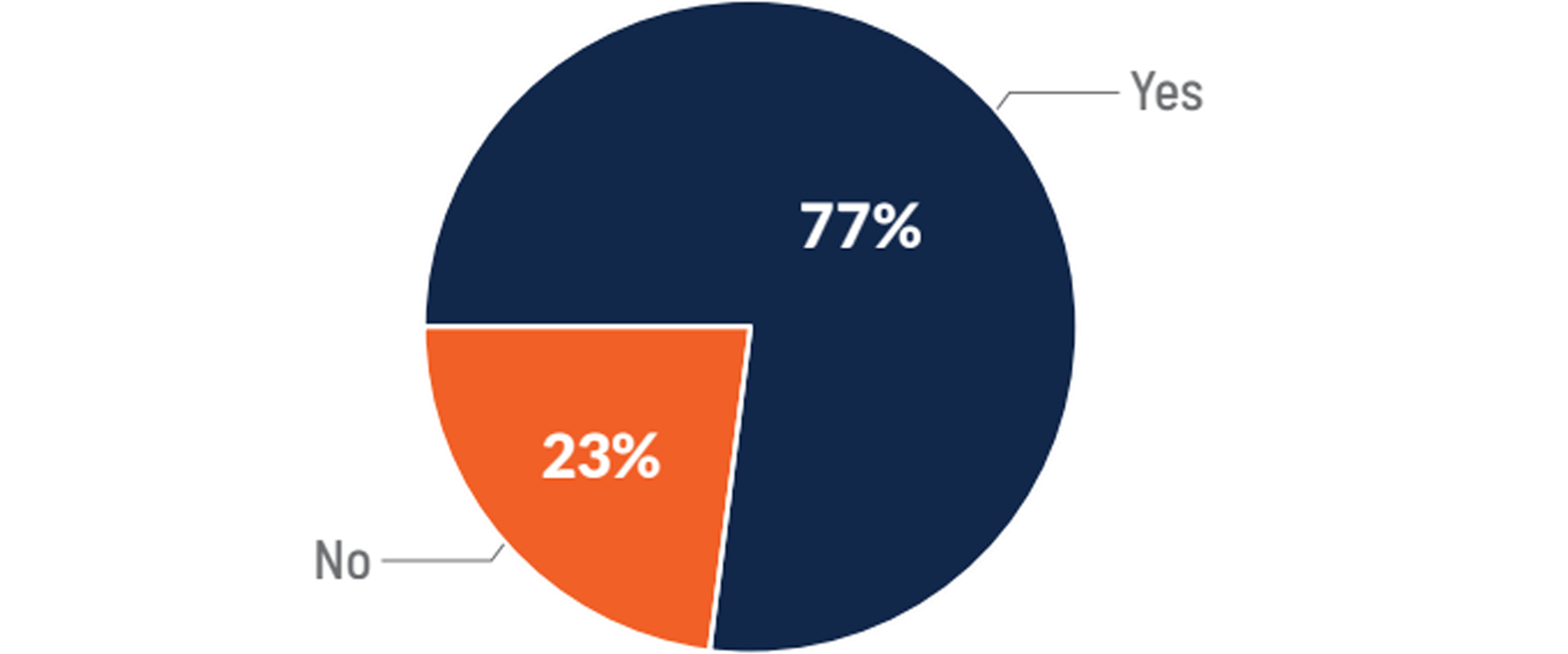

Of surveyed organizations, 77% are currently working on AI governance, rising to nearly 90% among organizations already using AI. Importantly, 30% of organizations not yet using AI reported working on AI governance, perhaps revealing a "governance first" approach in which good governance is put in place before AI use. This is supported by some of the case studies, which indicate organizations are implementing formal AI governance programs after using AI for smaller use cases but before embracing AI as a strategic imperative.

Starting an AI governance program involves hiring new employees or dedicating existing ones to an AI governance team. Companies are building these teams incrementally, first assigning tasks to the existing workforce and then hiring and empowering senior managers and executives, which the data suggests leads to fewer issues using AI and reporting on AI governance, among other positive outcomes. Many of the case studies illustrate how newer AI governance programs hire managers with prior experience in a digital governance discipline, such as privacy.

Is your organization currently working on AI governance?

A significant challenge identified by respondents was access to appropriate AI governance talent and skills in the workforce. Of respondents, 23.5% said finding qualified AI professionals was part of the challenge in delivering AI. Another part of the challenge is the range of skills qualified AI governance professionals need. They should, of course, understand AI, but they also need experience in governance, risk and compliance, and they should be able to, for example, translate legislative requirements into actionable policies. While larger companies can split these tasks into several roles, smaller companies will look for AI governance professionals who can cover all these areas. Respondents indicated AI governance skills will continue to evolve alongside the development of new types of AI technologies and policies. Certain skills, such as red teaming, will be increasingly necessary.

There is no clear best practice for how to build and organize an AI governance team. This includes where to place those directly responsible for AI governance, for example in a separate team or within a broader team responsible for other digital portfolios. From an organizational structure perspective, the data shows 50% of AI governance professionals are assigned to ethics, compliance, privacy or legal teams. Many organizations with mature AI governance programs are drawing in specialists from several departments, regardless of the main AI governance function. Over 50% of respondents indicated the following disciplines would gain additional responsibility: privacy, IT, security, and legal and compliance.

Disciplines already involved in digital responsibility are relevant as either the main function or a collaborating function in AI governance. Responsibility is often shared between privacy and other disciplines, such as cybersecurity or data governance. Respondents indicated AI governance professionals come from different disciplines and areas of expertise, and a large number of privacy professionals are being asked to take on AI governance roles.

Top functions tasked with the primary responsibility for AI governance

- 22% – Privacy

- 22% – Legal and compliance

- 17% – IT

- 10% – Data governance

- 6% – Ethics and compliance

- 5% – Security
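As a quick arithmetic check on the list above, the following minimal Python sketch totals the listed shares and works out what is left over. It assumes the figures are rounded percentages with one primary function per respondent, so the label "other or unspecified" for the remainder is an assumption rather than a category named in the report.

```python
# Shares of respondents naming each function as primarily responsible
# for AI governance, as listed in the report (percent of respondents).
primary_responsibility = {
    "Privacy": 22,
    "Legal and compliance": 22,
    "IT": 17,
    "Data governance": 10,
    "Ethics and compliance": 6,
    "Security": 5,
}

listed_total = sum(primary_responsibility.values())

# Assumption: shares are rounded and mutually exclusive, so whatever is
# left over belongs to functions the list does not name.
unlisted_share = 100 - listed_total

# Rank functions from largest to smallest share; ties keep listing order
# because Python's sort is stable.
ranked = sorted(primary_responsibility.items(), key=lambda kv: kv[1], reverse=True)

for function, share in ranked:
    print(f"{share:>3}%  {function}")
print(f"{listed_total:>3}%  listed functions combined")
print(f"{unlisted_share:>3}%  other or unspecified (assumed)")
```

Under those assumptions, the six listed functions account for 82% of respondents, which leaves roughly 18% for functions not shown.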

Organizations building their AI governance programs out of or in conjunction with their privacy programs are likely to adapt the structures, processes and tools of those privacy programs. One common example is the AI risk impact assessment process, in which relevant questions about AI are added to existing privacy governance documentation and processes.

AI governance is still evolving, and mature AI governance programs continue to find room to innovate. As new guidance and compliance burdens emerge, alongside new and greater commercial opportunities associated with good governance, a mature AI governance program will look different in 2025 or 2026 than it did in 2024 at the same organization. Nevertheless, patterns have already emerged, especially in the integrated nature of diverse digital teams, what governance looks like at different-sized organizations and each organization's approach to AI governance.

Key Takeaways

Organizations are building the foundation for compliance and strategy implementation.

Organizations using AI, a term that can capture most analytical technologies depending on the definition, are increasingly looking to understand their compliance obligations. Many organizations use AI governance to build out a compliance program while also steering AI use toward strategic aims, from reducing headcount to increasing market competitiveness. For almost half of the respondents, AI governance was a top-five strategic priority.

Organizations see hiring AI governance professionals as an important part of professionalizing an AI governance program.

Few organizations are satisfied with their level of AI governance staffing, with only 10 out of 671, or 1.5%, reporting they will not need additional staff in the next 12 months.
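The percentage quoted above can be reproduced with a short calculation. This is only an illustrative sketch: the variable names are made up, and treating every other respondent as expecting to need more staff is an assumption, since the report does not say how non-answers were handled.

```python
# Figures quoted in the report for the staffing question.
satisfied = 10        # respondents expecting no additional staff
respondents = 671     # total respondents cited for this question

# Share reporting no need for additional staff, rounded to one decimal.
share_satisfied = round(satisfied / respondents * 100, 1)

# Assumption: everyone else expects to need more staff in the next 12 months.
share_needing_staff = round((respondents - satisfied) / respondents * 100, 1)

print(f"No additional staff needed: {share_satisfied}%")
print(f"Additional staff needed (assumed): {share_needing_staff}%")
```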

Choosing the right leaders and functions to lead AI governance sets the tone for an AI governance program.

Who leads AI governance in the organization has a noticeable impact on those efforts and is often correlated with different markers of AI governance maturity. When primary responsibility for AI governance sat with the organization's privacy function, respondents were significantly more likely to be confident in their ability to comply with the EU AI Act, at 67%.

Organizations are thinking seriously about how to best approach AI governance.

This report shows there is no single path; each organization will need to consider its objectives and unique situation when deciding how to develop its AI governance program. While organizations can leverage their existing privacy and compliance functions to support AI governance, AI introduces unique risks that require collaboration across functions.

Research approach

Two data sources were used in the making of this report. In the spring of 2024, the IAPP conducted its annual governance survey. The survey contained broad demographic questions, such as the size and revenue of the organization, as well as 25 questions related to AI governance. More than 670 individuals from 45 countries and territories responded. In the survey, a few questions revealed whether respondents are confident in their approach to AI governance: "Are you confident in your compliance with the EU AI Act?" and "Is your AI governance budget adequate?" Other questions were more basic: "Are you actively working on AI governance?" and "What are your challenges in reporting on or using AI?" Through these questions, we teased out respondents' subjective understanding of the maturity of their AI governance programs. Some indicators, such as whether the organization has an AI governance committee, are a sign of a mature AI governance program for a larger company but might be irrelevant for a smaller company.

To complement the survey data, seven private companies provided case studies to highlight examples of AI governance programs. The companies are headquartered across North America and Europe, with business and governance practices distributed across the globe. The case studies describe how organizations make decisions relating to their AI governance programs, including the team's composition, its cross-functional cooperation and the tools employed. They add individual, specific context to the survey data, offering insight into the decisions made in each AI governance program. The variety of companies and their respective uses of AI mirrors the diversity of approaches to governing AI, while highlighting commonalities among similar institutions.

The survey data can be used to group organizations by type and size, as well as by which AI technologies an organization uses and for what purpose.

This content is eligible for Continuing Professional Education credits. Please self-submit according to CPE policy guidelines.

Contributors:

Richard Sentinella

Former AI Governance Research Fellow

IAPP

Evi Fuelle

Director, Global Policy

Credo AI

Ashley Casovan

Managing Director, AI Governance Center

IAPP

Joe Jones

Research and Insights Director

IAPP
