Editor's note: The IAPP is policy neutral. We publish contributed opinion and analysis pieces to enable our members to hear a broad spectrum of views in our domains.

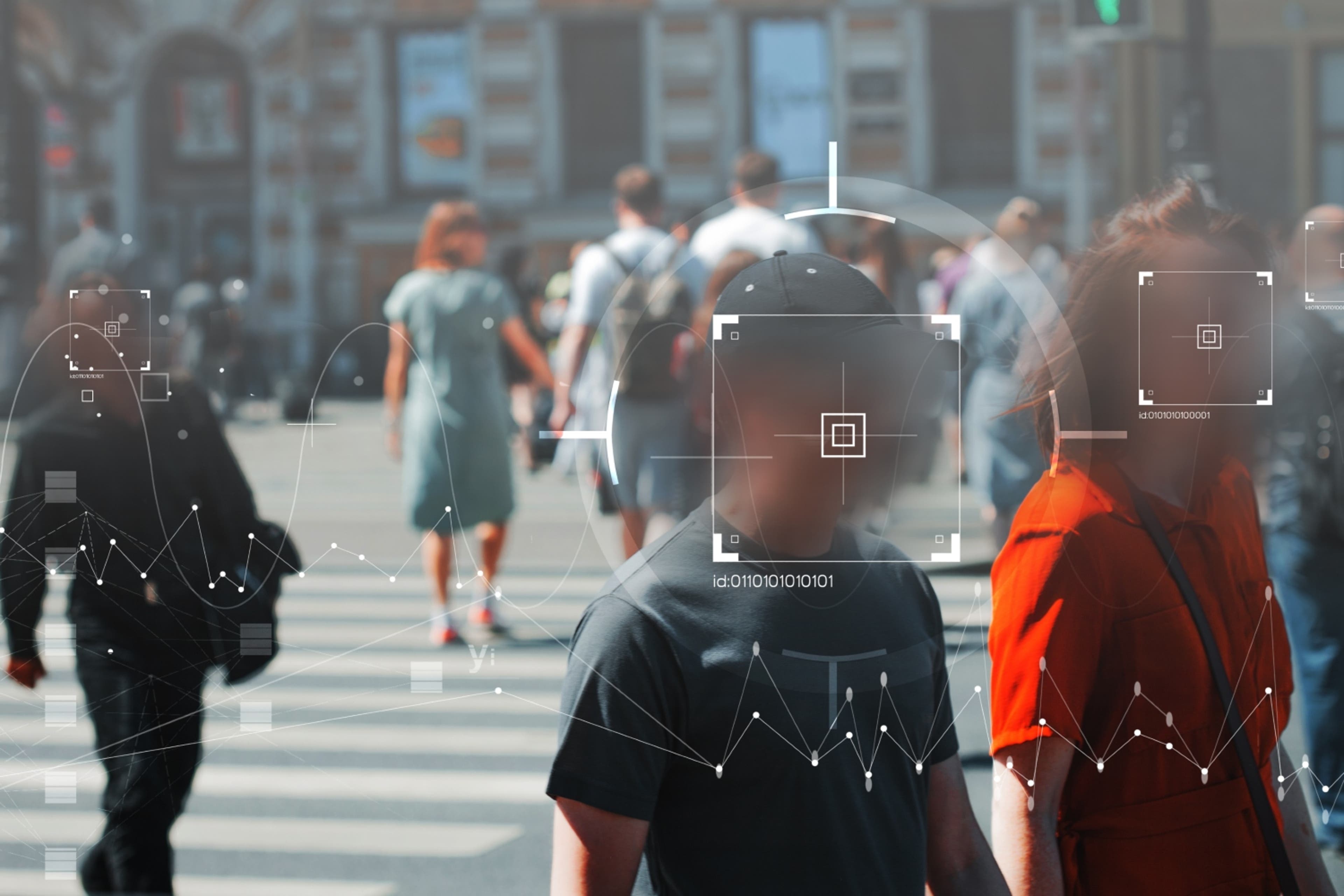

Facial recognition technology is rapidly transforming how Canadians navigate both public and private spaces. From airports and retail environments to policing and government services, facial recognition is being deployed to verify identities, streamline processes and enhance security.

But despite its growing presence, the technology has outpaced Canada's current legal and regulatory frameworks. Without improved oversight, its deployment risks eroding key civil liberties, including privacy, equality and the freedom to participate in public life without surveillance.

While facial recognition offers efficiencies and convenience, it also introduces serious risks: mass surveillance, systemic discrimination, lack of transparency and data breaches. Canada can regulate facial recognition responsibly by embedding strong privacy protections and ensuring innovation aligns with constitutional rights, which in turn would strengthen public trust.

Behind the facial scan

Facial recognition works by analyzing facial features to create a biometric template. These systems can either verify a claimed identity by comparing a face against a single stored template, known as one-to-one verification, or identify an unknown individual by searching a database of many templates, known as one-to-many identification. In Canada, police forces, airport authorities, casinos and shopping malls have all tested or implemented facial recognition to varying degrees.
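To make the distinction concrete, here is a minimal Python sketch that is not drawn from any real product. It assumes each template is simply a list of numbers (a face embedding) and that two faces "match" when the cosine similarity of their templates clears a threshold; the function names and the 0.9 threshold are hypothetical. The point it illustrates is that verification compares a face against one template, while identification compares it against every template in a database.

```python
import math
from typing import Optional

# Hypothetical representation: a "template" is a list of floats standing in
# for the feature vector a real system would derive from a face image.
Template = list[float]

# Hypothetical decision threshold; real systems tune this value, trading
# false matches against false non-matches.
THRESHOLD = 0.9


def cosine_similarity(a: Template, b: Template) -> float:
    """Similarity in [-1, 1]; higher means the two templates are more alike."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("templates must be non-zero vectors")
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (norm_a * norm_b)


def verify(probe: Template, enrolled: Template, threshold: float = THRESHOLD) -> bool:
    """One-to-one verification: does the probe match the single claimed identity?"""
    return cosine_similarity(probe, enrolled) >= threshold


def identify(probe: Template, gallery: dict[str, Template],
             threshold: float = THRESHOLD) -> Optional[str]:
    """One-to-many identification: which enrolled person, if any, is this?

    Every template in the gallery is compared; the best score at or above
    the threshold wins, otherwise None is returned.
    """
    best_id, best_score = None, threshold
    for person_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id
```

With a gallery of two enrolled templates, a probe close to one of them both verifies against that template and is identified as that person, while a probe resembling neither returns `None`. Because identification repeats the comparison across the whole gallery, each additional enrolled face is another chance for a false match, which is one reason one-to-many searches carry higher stakes.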

At border crossings, the technology offers smoother, faster clearance. Countries like Australia and the U.S. have already deployed facial recognition to streamline customs processing and enhance border security. In the retail sector, meanwhile, mall operator Cadillac Fairview embedded facial recognition in digital wayfinding kiosks to gather demographic insights about shoppers without their meaningful consent.

These tools may improve operational efficiency, but they also expose users to significant privacy risks. Biometric data, unlike passwords, cannot be changed if leaked or misused. When organizations collect facial data without informed consent, they risk breaching individual rights and violating legal obligations.

Systemic bias and discriminatory outcomes

Facial recognition systems have repeatedly been shown to misidentify women, older adults and people with darker skin tones at significantly higher rates. A 2019 study by the U.S. National Institute of Standards and Technology found African American and Asian women were among the most misidentified by commercial facial recognition algorithms.

These disparities are more than technical glitches. They have real-world consequences. In Detroit, Robert Williams, an African American man, was wrongfully arrested after being misidentified by a police facial recognition system.

In Canada, the Calgary Police Service began using facial recognition in 2014, but failed to submit a privacy impact assessment despite being advised to do so.

Without more representative training data and stronger oversight, facial recognition can unintentionally entrench discrimination, especially when used in high-stakes contexts like criminal investigations.

Weak regulatory protections in Canada

Despite the growing adoption of facial recognition, Canada's legal tools for regulating it are limited. The primary law governing personal information in the private sector, the Personal Information Protection and Electronic Documents Act, was enacted in 2000, long before artificial intelligence and biometric surveillance were commonplace.

PIPEDA does not explicitly classify biometric data as sensitive, nor does it require organizations to conduct PIAs before deploying facial recognition systems. While the Office of the Privacy Commissioner of Canada has issued guidance on these topics, that guidance is not legally binding.

The situation is even more concerning in the public sector. The federal Privacy Act applies to government institutions but has not been substantially updated since 1982. It provides only limited safeguards and gives the OPC minimal enforcement powers. When the Royal Canadian Mounted Police used Clearview AI, a facial recognition tool built from images scraped from across the internet, the OPC found the use unlawful but was unable to compel data deletion or issue penalties.

Quebec leads, but national harmonization is needed

Quebec's privacy reforms under Law 25 offer a more modern and proactive model. The law requires organizations to conduct PIAs for biometric systems, obtain opt-in consent and notify regulators before creating biometric databases. It also introduces meaningful enforcement, including penal fines of up to CAD25 million or 4% of global revenue, whichever is greater.

While promising, Quebec's approach is not shared nationwide. Other provinces rely on PIPEDA or weaker frameworks, creating a patchwork of protections. Organizations may exploit this patchwork by rolling out invasive technologies in jurisdictions with fewer rules, a form of forum shopping.

To ensure all Canadians enjoy equal privacy rights, Canada needs a harmonized, nationwide approach that draws from both Quebec's leadership and global standards like the European Union's General Data Protection Regulation.

Five recommendations for responsible regulation

As facial recognition technology, or FRT, becomes more embedded in everyday life, Canada stands at a crossroads. The challenge is no longer whether FRT will be used but how to ensure it is deployed ethically, transparently and in a way that respects democratic values. Current privacy laws were not designed for the scale and complexity of real-time biometric surveillance. If left as-is, this regulatory gap risks enabling mass surveillance, algorithmic bias and growing economic inequality.

To move forward responsibly, Canada needs a modernized framework that does more than just respond to harms after the fact. It must anticipate risks, embed human rights into design, and ensure equitable outcomes across society. With that in mind, here are five recommendations to guide responsible regulation.

Establish clear regulations to prevent mass surveillance

Canada must prohibit the use of facial recognition in public spaces absent meaningful oversight. People should not be tracked while shopping, attending protests or walking through a transit hub. Government and private-sector FRT use must be transparent and strictly limited, and it must require clear public notice and consent.

Legislation should also restrict real-time facial recognition surveillance unless it meets high legal thresholds and oversight requirements, such as a court warrant or review by an independent authority.

Implement algorithmic audits and bias mitigation

To reduce discriminatory outcomes, Canada should require algorithmic audits for all facial recognition systems. These audits must assess performance across demographic groups, including sex, age and skin tone, and be conducted by independent third parties. Developers should also use diverse and representative datasets to train their models.
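As an illustration of what such an audit measures, the following Python sketch disaggregates error rates by group. It uses the false match rate (FMR) and false non-match rate (FNMR), the metrics NIST reports in its demographic testing, but the `Trial` record, the group labels and the 2x disparity ceiling are hypothetical choices for this example, not a regulatory standard.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trial:
    """One labelled comparison from an audit dataset (hypothetical schema)."""
    group: str         # demographic group assigned by the auditor
    same_person: bool  # ground truth: do both images show the same person?
    matched: bool      # the system's decision


def error_rates_by_group(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Compute false match rate (FMR) and false non-match rate (FNMR) per group."""
    counts = defaultdict(lambda: {"fm": 0, "impostor": 0, "fnm": 0, "genuine": 0})
    for t in trials:
        c = counts[t.group]
        if t.same_person:
            c["genuine"] += 1
            if not t.matched:  # a genuine pair the system failed to match
                c["fnm"] += 1
        else:
            c["impostor"] += 1
            if t.matched:      # two different people wrongly matched
                c["fm"] += 1
    return {
        group: {
            "FMR": c["fm"] / c["impostor"] if c["impostor"] else 0.0,
            "FNMR": c["fnm"] / c["genuine"] if c["genuine"] else 0.0,
        }
        for group, c in counts.items()
    }


def flag_disparities(rates: dict[str, dict[str, float]],
                     metric: str = "FMR", max_ratio: float = 2.0) -> list[str]:
    """Return groups whose error rate exceeds max_ratio times the lowest group's.

    If the lowest rate is zero, any group with a nonzero rate is flagged.
    """
    best = min(r[metric] for r in rates.values())
    return sorted(
        group for group, r in rates.items()
        if r[metric] > 0 and r[metric] > max_ratio * best
    )
```

An auditor would feed in labelled comparison results for each group. A group flagged here, for instance one whose false match rate is more than double that of the best-performing group, would warrant investigation before the system is deployed.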

Promote workforce reskilling and support displaced workers

As facial recognition and related automation technologies replace roles in security, customer service and border control, governments must invest in retraining programs. Policies should support transitions into high-demand fields like cybersecurity, AI governance and privacy compliance.

Reskilling isn't just about protecting jobs — it's about ensuring the benefits of innovation are equitably distributed. Programs must prioritize access for lower-income workers, racialized communities and others who are most at risk of economic displacement.

Adopt international best practices for data protection

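Canada should look to international frameworks like the GDPR for inspiration. For example, the GDPR mandates data minimization and purpose limitation, and it treats biometric data used to uniquely identify a person as a special category whose processing is prohibited unless a narrow exception, such as explicit consent, applies.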

Bill C-27, Canada's proposed privacy reform legislation, is a step forward. It includes provisions for recognizing biometric data as sensitive and grants the OPC new enforcement powers. However, it still lacks mandatory PIAs and real-time disclosure obligations for facial recognition.

Strengthen public education and stakeholder engagement

Many Canadians are unaware when or where they are being scanned by facial recognition systems. Policymakers should prioritize transparency and public education. Awareness campaigns should inform people of their rights and how biometric systems work.

Privacy regulators should also consult widely, not just with technology firms, but also with human rights groups, Indigenous communities, racial justice advocates and academic researchers. This inclusive approach will help ensure regulation reflects the values of Canadian society.

Conclusion

Canada faces a crucial moment in its history. Facial recognition technology is here, but robust governance is not. If left unchecked, the technology could normalize surveillance, deepen inequality and erode public trust.

But with the right safeguards, Canada has an opportunity to lead. By harmonizing laws across the nation, empowering regulators and embedding equity into every stage of deployment, Canada can set a global example for how democratic societies can govern powerful technologies responsibly.

Facial recognition may be here to stay, but so too are the rights and freedoms society must always uphold.

Alvin Leenus is a third-year law student at the University of Ottawa's English Common Law Program and Kris Klein, CIPP/C, CIPM, FIP, is managing director of IAPP Canada and teaches privacy law at the University of Ottawa, Faculty of Law.