Privacy and digital health data: The femtech challenge

Contributors:

Amy Olivero

Associate in Cybersecurity and Privacy Practice

WilmerHale

The U.S. Supreme Court's decision in Dobbs v. Jackson Women's Health Organization has raised the stakes for privacy protections of health data in the U.S. The femtech market, that is, digital tools such as mobile applications related to women's health, is projected to reach $51.6 billion globally by the end of the year, more than a third of the total valuation of digital health. While the repercussions of gaps in U.S. digital health data protections extend well beyond women's health, the post-Dobbs privacy concerns in the femtech market highlight the complexities of today's health privacy protections and the ad tech ecosystem.

The U.S. Federal Trade Commission recently reiterated that the agency will "vigorously enforce the law if we uncover illegal conduct that exploits Americans' location, health, or other sensitive data." This makes it all the more important for companies and privacy professionals to understand the shifting regulatory and enforcement landscape and how policymakers are approaching potential future laws and agency rules.

Health-relevant data obligations in comprehensive state privacy laws

The Health Insurance Portability and Accountability Act (HIPAA), adopted in 1996, protects health information only when it is generated, stored or transmitted by specified covered entities (health plans, health care clearinghouses and health care providers) and their business associates. The 2009 Health Information Technology for Economic and Clinical Health Act, which updated certain provisions of HIPAA to align with evolving technology, continued the law's focus on the regulation of clinical electronic data.

These laws do not address health data created outside of a clinical context, leaving a gap in digital health privacy protections that has widened with the growth of mobile health apps and other technologies. HIPAA therefore does not apply to a broader category of health-relevant data that individuals generate or store on their personal devices and mobile apps, or that wearable fitness trackers create. Likewise, sensitive information such as geolocation data and online search and purchase history that is collected and sold is largely unprotected by federal law.

California, Colorado, Connecticut, Virginia and Utah have started to address this gap in their comprehensive privacy laws. Each of these laws includes a HIPAA exemption, so clinically generated protected health information may not be subject to the new state privacy obligations. However, other health-relevant data not covered by HIPAA is subject to the state protections, including the individual rights these five laws establish to access, correct and delete personal data and to opt out of its sale and certain other processing.

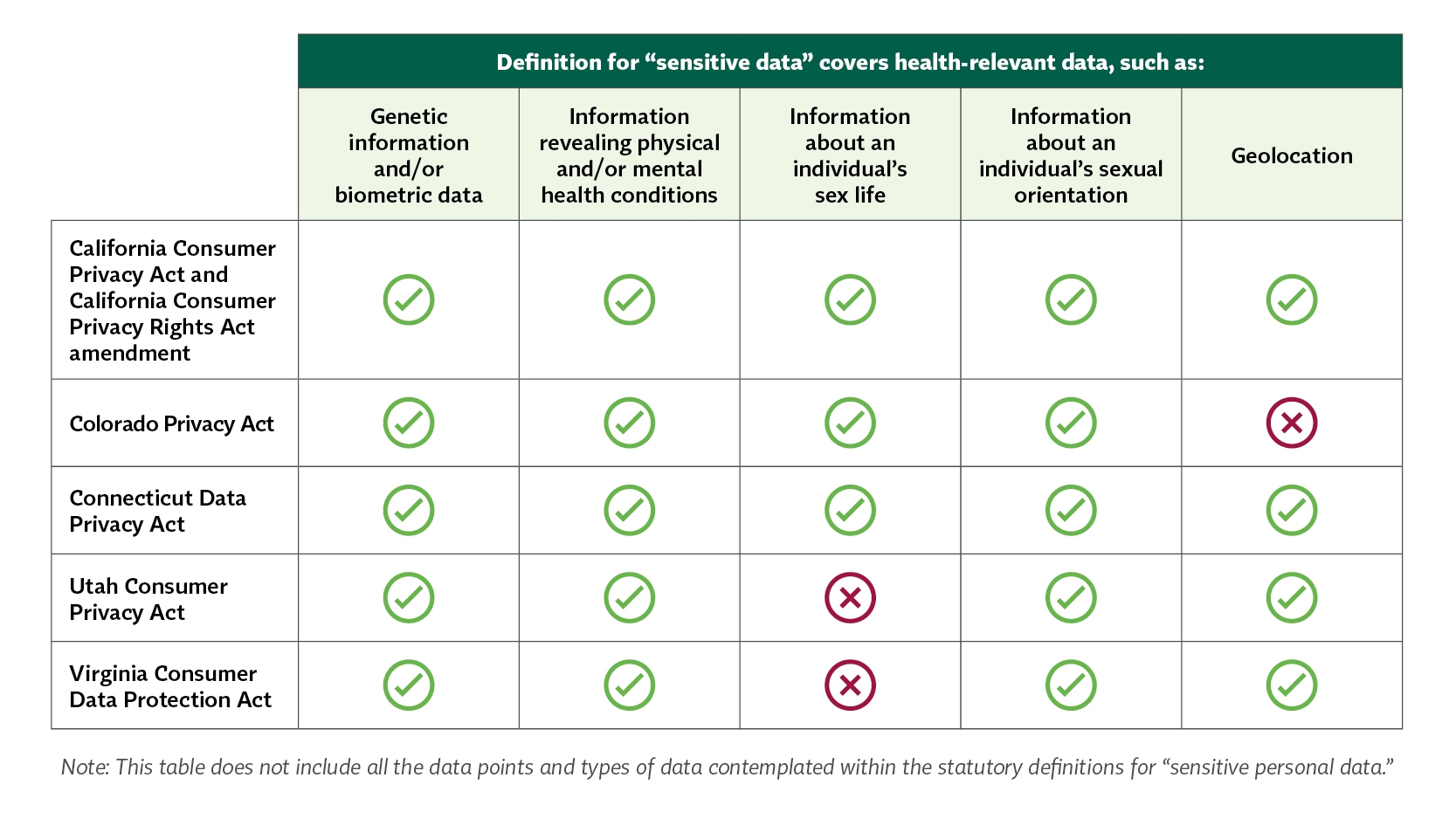

A key definition in each law is what health-relevant information is considered "sensitive personal data" that is subject to stricter requirements. These requirements aim to mitigate the risk of re-identification and harmful profiling that is possible through aggregated and publicly-available personal data. While some sensitive data categories are common across the five state laws, there are some slight differences in whether geolocation and information about an individual's sex life are considered sensitive data points, as illustrated in the table below:

Each state's heightened protections for sensitive data also differ. The California Privacy Rights Act (CPRA) has the most expansive definition of sensitive data and establishes an opt-out scheme for processing that data. Meanwhile, the Colorado, Connecticut and Virginia privacy laws contain more limited definitions but require consent before a data controller can process sensitive data. Utah's law combines elements of both approaches: it has a more limited definition of sensitive data but follows an opt-out scheme similar to California's, under which the data controller must "present the consumer with clear notice and an opportunity to opt out of processing" rather than obtain consent.
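For developers, the split between consent-first and opt-out states described above can be modeled, very roughly, as a lookup table plus one decision function. This is a simplified sketch of the summary in this article only: the state codes, the `Scheme` enum and the `may_process_sensitive` function are hypothetical names, and the model ignores each law's definitions, exceptions and notice details. It also assumes, based on the Utah language quoted above, that opt-out states require clear notice before processing.

```python
from enum import Enum


class Scheme(Enum):
    OPT_IN = "consent required before processing sensitive data"
    OPT_OUT = "processing allowed unless the consumer opts out"


# Hypothetical lookup table reflecting only the high-level summary above.
SENSITIVE_DATA_SCHEME = {
    "CA": Scheme.OPT_OUT,
    "CO": Scheme.OPT_IN,
    "CT": Scheme.OPT_IN,
    "VA": Scheme.OPT_IN,
    "UT": Scheme.OPT_OUT,
}


def may_process_sensitive(state: str, *, consented: bool, opted_out: bool,
                          notice_given: bool) -> bool:
    """Return whether sensitive data may be processed under this simplified model."""
    scheme = SENSITIVE_DATA_SCHEME[state]
    if scheme is Scheme.OPT_IN:
        # Colorado, Connecticut, Virginia: affirmative consent comes first.
        return consented
    # Opt-out states (assumption): clear notice given and no opt-out on record.
    return notice_given and not opted_out
```

For example, `may_process_sensitive("CO", consented=False, opted_out=False, notice_given=True)` returns `False`, while the same call for `"UT"` returns `True`. Keeping the per-state rule in a table rather than in scattered conditionals makes it easier to update as these laws are amended.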

State protections beyond comprehensive legislation

In addition to comprehensive data privacy legislation, health-relevant privacy protections can be found in some state constitutions and sector-specific laws.

First, two states, Missouri and Michigan, have amended their state constitutions to provide specific protections and requirements for searches and seizures of electronic communications and data. These amendments and the U.S. Supreme Court's ruling in Riley v. California, which established a warrant requirement for police searches of cellphones, took on new meaning after Roe v. Wade was overturned, as pro-choice advocates worried about the possible criminalization of women seeking abortion and other reproductive health services. These constitutional provisions may provide a basis for future legal challenges to law enforcement's current practice of obtaining data from third-party brokers without a warrant to gather potentially incriminating evidence from mobile devices and electronic communications.

Second, some states are building privacy protections in more targeted ways, such as through data broker laws. For example:

- California's law establishes a data broker registry.

- Nevada mandates that consumers have the option to opt out of the sale of their personal data to third parties.

- Vermont requires data brokers to register with the secretary of state and uphold a "duty to protect personally identifiable information," which includes biometric and genetic information and records from wellness programs and health professionals.

Third, growing recognition and potential enforcement of global privacy controls and universal opt-out requests could affect the collection, retention and sale of health-relevant data via mobile apps. In statements about the settlement with Sephora over its alleged violation of the California Consumer Privacy Act (CCPA), California Attorney General Rob Bonta clarified that the statute requires businesses to honor user-enabled global privacy controls. Similarly, the Colorado Privacy Act directs the state attorney general to develop regulations on how businesses should respond to universal opt-out requests, which businesses must honor by July 1, 2024.
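Global privacy controls are usually sent as a browser signal. The Global Privacy Control proposal defines an HTTP request header, `Sec-GPC`, whose value `1` expresses the user's opt-out preference. The sketch below shows one way a server might check for that header; it assumes headers arrive as a plain mapping of strings, and `gpc_opt_out_requested` and `should_share_with_third_parties` are hypothetical helper names. How a signal must be treated under a given statute is a legal question this sketch does not model.

```python
from typing import Mapping


def gpc_opt_out_requested(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries a Global Privacy Control signal.

    HTTP header names are case-insensitive, so names are lowercased
    before looking up Sec-GPC.
    """
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    # In the GPC proposal, "1" is the value that signals the opt-out preference.
    return normalized.get("sec-gpc") == "1"


def should_share_with_third_parties(headers: Mapping[str, str],
                                    user_opted_out: bool) -> bool:
    # Hypothetical policy: treat a GPC signal exactly like an explicit opt-out.
    return not (user_opted_out or gpc_opt_out_requested(headers))
```

With this design, `gpc_opt_out_requested({"Sec-GPC": "1"})` returns `True`, and a request without the header falls back to whatever preference the user has stored.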

Finally, California enforces additional health-relevant privacy protections through its Genetic Information Privacy Act, which protects information collected through consumer genetic testing kits, and the Confidentiality of Medical Information Act (CMIA). Although the CMIA's text extends privacy obligations to "a provider of health care, health care service plan, or contractor," Bonta has asserted that the law can also apply to some mobile apps that maintain information intended for use in managing a health condition or derived from health care providers.

The state's settlement with Glow, explained below, demonstrates this application. Notably, California legislators also introduced AB 1436 last year, which would extend CMIA protections to health data in direct-to-consumer technologies without requiring a direct nexus to a health care provider or specific health condition. The proposed legislation passed the state Assembly but is currently held under submission in the Senate.

State attorney general enforcement actions against mobile app developers and ad tech companies

The state laws and regulations for health-relevant data described above may now be enforced by state attorneys general around the country.

In 2020, California's attorney general sued Glow, a technology company that developed and operated a fertility-tracking mobile app, for violating the CMIA and two other laws. Glow was subject to the CMIA's heightened confidentiality and security requirements because it offered "software to consumers … designed to maintain medical information for the purposes of allowing its users to manage their information or for the diagnosis, treatment, or management of a medical condition."

California alleged that Glow failed to comply with the CMIA because of vulnerabilities in its password-change process and a feature that allowed users to share their health data with another person without first properly authenticating that person. The settlement included a $250,000 civil penalty and injunctive terms requiring the company to adopt additional privacy and security measures, such as obtaining affirmative consent before disclosing data to third parties, implementing employee training and assessing how weak privacy and security practices uniquely affect women.

State attorneys general have also enforced state consumer protection laws to protect health-relevant data. In 2017, the Massachusetts attorney general reached a settlement with marketing company Copley Advertising that barred its use of geofencing technology to track consumers entering reproductive health clinics and then sell that data to third-party advertisers such as pregnancy counseling organizations. Then, in 2020, Vermont sued Clearview AI over data-scraping practices that used consumers' online images to develop facial recognition technology without their consent. The litigation is still ongoing.

The FTC's bolstered enforcement efforts to protect health-relevant data

Alongside this activity in the states, enforcement actions against health app companies and data brokers have been increasing even faster at the federal level. In its complaint last year against Flo Health and its fertility-tracking mobile app, the FTC alleged that Flo violated Section 5(a) of the FTC Act, which prohibits "unfair or deceptive acts or practices in or affecting commerce," through its misrepresentations about consumers' privacy. The Commission charged that Flo disclosed user data to third parties without consent, including information about users' menstrual cycles, intention to get pregnant and place of residence, in contradiction of its privacy policies. These policies assured users that:

- The app did not share data with third parties.

- Data would only be used for specific purposes.

- The app would only use non-personally identifiable information and tags.

The final settlement required Flo Health to cease the misrepresentations, obtain affirmative consent from users for its data-sharing practices, and obtain an independent review of its privacy practices.

Earlier this year, the U.S. Department of Justice, on behalf of the FTC, took action against WW International, the company better known as Weight Watchers, and a subsidiary for violating the Children's Online Privacy Protection Act (COPPA) Rule. The complaint alleged the company did not provide adequate notice about the information it collected through its website and app, did not take measures to prevent children under 13 from signing up without parental consent, and retained children's health information indefinitely unless a parent expressly requested its deletion, in violation of COPPA. The settlement required WW International to delete all illegally collected children's health data, delete any algorithms created using that data and pay a $1.5 million civil penalty.

Finally, in a more recent case against location data broker Kochava, the FTC alleged that the company's practice of selling, for advertising purposes, customizable data packages that pair unique mobile device identifiers with "timestamped latitude and longitude coordinates showing the location of mobile devices" put consumers at significant privacy risk. The complaint argued this information enabled purchasers to identify and track individuals not only at their homes but also at other sensitive locations, such as reproductive health clinics, places of worship and domestic violence shelters. The complaint further alleged that Kochava had not taken adequate measures to protect its data from public access.
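The concern that a device identifier plus timestamped coordinates can reveal where someone lives can be illustrated with a simple heuristic: collect one device's overnight location pings and take the most frequent spot. This is a hypothetical sketch, not drawn from the complaint or from Kochava's actual data format; the `Ping` record, the overnight window, the rounding precision and the sample points are all assumptions, and the coordinates are synthetic.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Ping:
    device_id: str       # hypothetical mobile advertising identifier
    timestamp: datetime  # local time of the observation
    lat: float
    lon: float


def likely_home(pings: Iterable[Ping], device_id: str, night_start: int = 22,
                night_end: int = 6, precision: int = 3) -> Optional[Tuple[float, float]]:
    """Guess a device's home as its most frequent overnight location.

    Coordinates are rounded to `precision` decimal places (3 places is
    roughly 100 m of latitude) so repeat visits land in the same bucket.
    """
    overnight = Counter(
        (round(p.lat, precision), round(p.lon, precision))
        for p in pings
        if p.device_id == device_id
        and (p.timestamp.hour >= night_start or p.timestamp.hour < night_end)
    )
    if not overnight:
        return None
    return overnight.most_common(1)[0][0]


if __name__ == "__main__":
    # Synthetic pings for illustration only.
    sample = [
        Ping("device-a", datetime(2022, 8, 1, 23, 15), 40.71281, -74.00602),
        Ping("device-a", datetime(2022, 8, 2, 2, 40), 40.71279, -74.00598),
        Ping("device-a", datetime(2022, 8, 2, 14, 0), 40.75800, -73.98550),
    ]
    print(likely_home(sample, "device-a"))  # (40.713, -74.006)
```

Rounding coordinates is a deliberately crude way to cluster locations; the point is only that a stable identifier plus repeated overnight timestamps is enough to single out one place, which is why the same data can also expose visits to clinics or shelters.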

Bills circulating around Capitol Hill forecast what could be ahead for digital health privacy

In addition to increased enforcement from the FTC, several federal bills containing health-relevant privacy protections have been introduced in Congress recently. The following bills each have bipartisan sponsors:

- S. 24 — The Protecting Personal Health Data Act: This proposed legislation recognizes the gap in privacy protections for health data not regulated under HIPAA and directs the Secretary of Health and Human Services to promulgate regulations for new health technologies, including mobile apps and direct-to-consumer genetic testing kits, that strengthen and standardize protections for the collection, use and disclosure of health data in areas such as individual control and access, consent, third-party transfers and security. The bill also establishes a national task force on health data protection.

- S. 3620 — The Health Data Use and Privacy Commission Act: This bill proposes a commission that would draft recommendations for "the appropriate balance to be achieved between protecting individual privacy and allowing and advancing appropriate uses of personal health information." It states the commission would review the effectiveness of existing law and self-regulatory schemes in the private sector and analyze issues such as user consent, sale and transfer of personal health information, data use notices, and deidentification standards for health-relevant data in light of new technologies and increasing data collection.

- S. 3627, H.R. 6752 — The Data Elimination and Limiting Extensive Tracking and Exchange (DELETE) Act: This bill represents another bipartisan effort for privacy regulations that would also extend to health-relevant data. The bill authorizes the FTC to establish a mandatory registry for data brokers and a centralized data deletion system for individuals to request that registered data brokers delete their information and request to be added to a "Do Not Track" list for data brokers.

These proposed bills offer valuable insight into how federal lawmakers are thinking about the balance between data privacy and easier access to health data and analysis outside of HIPAA.

Conclusion

In the more than 25 years since the passage of HIPAA, the explosion of health-related mobile apps, especially reproductive health and fertility apps, has triggered heightened privacy, law enforcement and civil rights concerns. State and federal entities are now responding to these concerns, as demonstrated through increased legislative efforts and enforcement actions. As Samuel Levine, the director of the FTC's Bureau of Consumer Protection, stated, "Where consumers seek out health care, receive counseling, or celebrate their faith is private information that shouldn't be sold to the highest bidder."

As the privacy laws and enforcement actions that protect consumers' digital health-relevant data continue to grow, developers should familiarize themselves with the applicable privacy and security requirements so they can meet consumers' privacy expectations and remain compliant as the rules evolve.