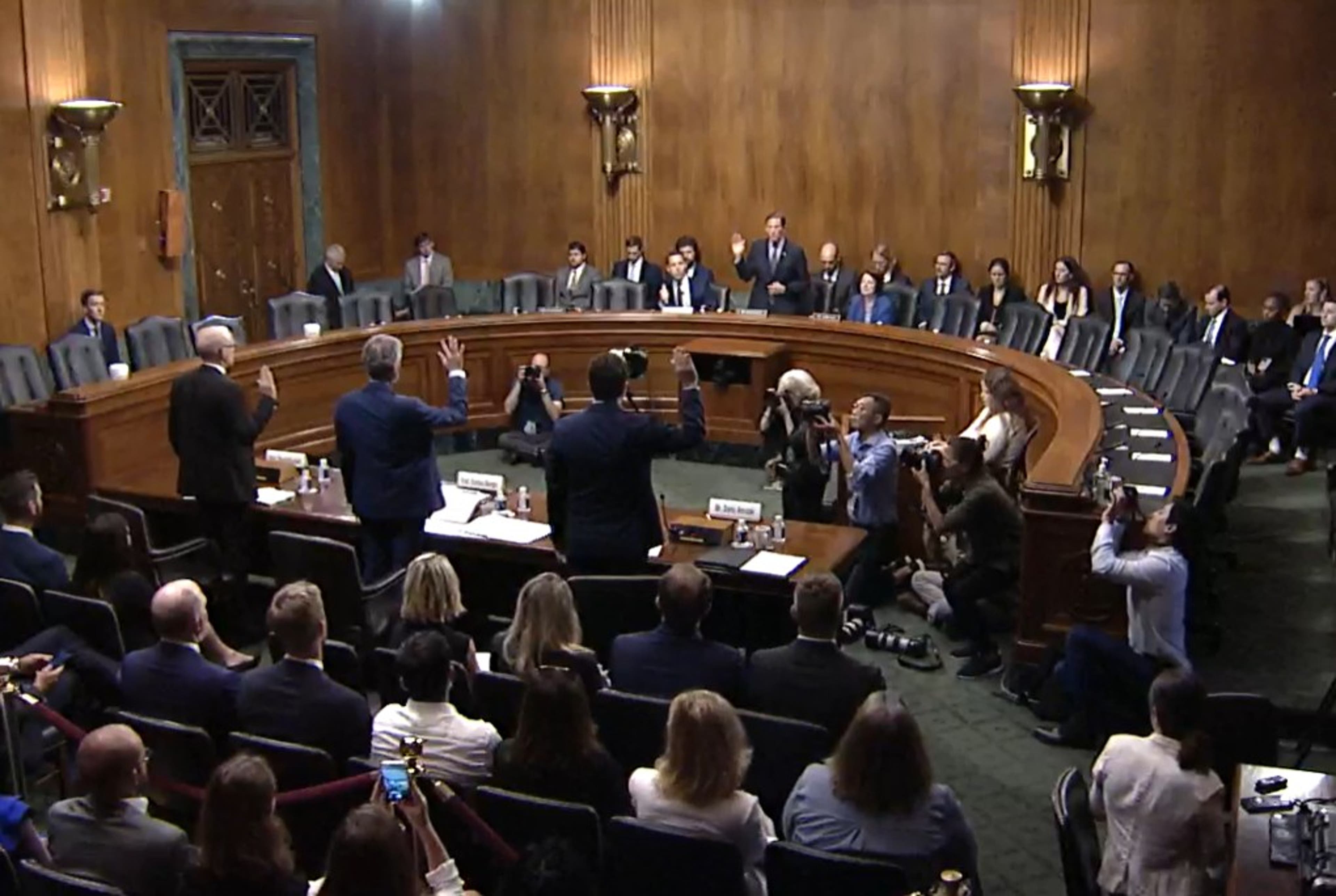

The U.S. Senate Committee on the Judiciary's Subcommittee on Privacy, Technology and the Law held another hearing probing artificial intelligence 25 July with leading AI academics and the co-founder of a public benefit AI research company. Each witness offered suggestions for how AI might be regulated across a variety of applications.

Following up on the subcommittee's May hearing on the same topic, subcommittee chair Richard Blumenthal, D-Conn., said the objective of the latest meeting was to "lay the ground for (AI) legislation."

"To go from general principles to specific (legal) recommendations," he said. "To use this hearing to write real, enforceable laws."

Panelists were pressed on a range of legal and national security concerns surrounding AI development, from privacy harms to individual citizens to global security threats.

Potential for substantial risks

Montreal Institute for Learning Algorithms founder and scientific director Yoshua Bengio said AI could reach human-level intelligence far sooner than researchers once expected, and that time is running out to establish the technical and legal frameworks needed to prevent the rise of "rogue" AI models.

"Advancements have led many top AI researchers to revise our estimates of when human-level intelligence could be achieved, which was previously thought to be decades or even centuries away. We now believe it could be within a few years or decades," Bengio said. "The shorter timeframe, say, five years, is really worrisome, because we'll need more time to effectively mitigate the potentially significant threats to democracy, national security and our collective future."

"We could reduce the probability of a rogue AI showing up by maybe a factor of 100 if we do the right things in terms of regulation," he continued.

Anthropic CEO and co-founder Dario Amodei said AI risks can be grouped into short-, medium- and long-term categories. He said short-term risks include "bias, privacy and misinformation," while long-term risks could take the form of a fully autonomous AI model that could "threaten humanity." Amodei's company was among seven Big Tech firms that recently made voluntary commitments to U.S. President Joe Biden to conduct internal and external security testing of their AI systems before releasing them for commercial use.

During opening remarks, Blumenthal said President Biden’s effort to secure voluntary commitments from Big Tech to responsibly develop generative AI was not substantive enough to prevent negative outcomes, and the "inexorable" advancement of the technology requires regulatory oversight by a new federal agency.

Amodei added that any AI regulation, and any federal agency tasked with enforcing rules on AI development, must be able to evaluate the risk posed by a given AI model.

"This idea of being able to even measure that the risk is there is really the critical thing," Amodei said. "If we can't measure (risk), then we can put in place all of these regulatory apparatuses, but it'll all be a rubber stamp. So, funding for the measurement apparatus and the enforcement apparatus, working in concert, is going to be central here."

Blumenthal also spoke about how his personal fears of dystopian outcomes from widespread AI adoption had been heightened by the written testimony each of Tuesday's witnesses submitted.

"The nightmares are reinforced," Blumenthal said, addressing the witnesses. "I've come to the conclusion that we need some kind of regulatory agency, but not just a reactive body, not just a passive rules-of-the-road maker … but actually investing proactively in research so we develop countermeasures against the kind of autonomous out-of-control scenarios that are potential dangers. ... The urgency here demands action."

Legislative suggestions

The subcommittee's work has already yielded draft AI legislation: on Tuesday, Blumenthal and subcommittee ranking member Josh Hawley, R-Mo., touted their "No Section 230 Immunity for AI Act." The bill would withhold from AI technology the protections that Section 230 of the Communications Decency Act affords to social media companies, and it would establish a private right of action allowing U.S. citizens to sue if their personal information is used to train an AI model.

Bengio suggested adding criminal penalties to reduce the likelihood of a bad actor using AI to fraudulently replicate a person's voice, image or name.

"If you think about counterfeiting money, the criminal penalties are very high, and that deters a lot of people," Bengio said. "When it comes to counterfeiting humans, (the penalty) should be at least at the same level."

The witnesses' other core legislative suggestions came in response to Hawley asking what action Congress should take in short order. All three generally agreed on the need to establish a federal regulatory agency specifically for AI.

Besides creating a federal agency, University of California, Berkeley, computer science professor Stuart Russell said he supports mandatory third-party testing of AI models, a licensing regime and an "international coordinating body." Other basic AI rules, he said, should include a right for humans to know "if one is interacting with a person or a machine" and a "kill switch" for each model.

"Analytic predictability is as essential for safe AI as it is for the autopilot on an airplane," Russell said. "Go beyond the voluntary steps announced last Friday (at the White House). Systems that break the rules must be recalled from the market for anything from defaming real individuals to helping terrorists build biological weapons."

"Developers may argue that preventing these behaviors is too hard, because (large language models) have no notion of truth and are just trying to help," he added. "This is no excuse."

Bengio said the government should focus its regulatory efforts on limiting access to powerful AI systems, structuring the "incentives for them to act safely" and aligning AI systems with agreed-upon values and norms.

"The potential for harm an AI system can cause can affect indirectly, for example, through human actions, or directly, for example, through the internet," Bengio said. "Looking at risks through the lens of the factors of access, alignment, intellectual power, and scope of actions is critical to designing appropriate government interventions."

Amodei said whatever enforcement mechanism ultimately comes into force must include a comprehensive regime for testing and auditing AI models.

"These are complex machines. We need an enforcement mechanism that people are able to look at these machines and say what are the benefits of these, and what is the danger of this particular machine, as well as multiple machines in general," Amodei said. "I, personally, am open to whatever administrative mechanism puts those kinds of tests in place. I'm agnostic to whether it's a new agency or extending the authorities of existing agencies, but whatever we do, it has to happen fast."